I still remember the day our “tiny” model rollout turned into a full-blown incident review—latency spiked, costs followed, and suddenly everyone cared about data lineage like it was a fire alarm. That was my wake-up call: AI doesn’t just improve models; it reshapes the entire operating system around data science. In this post, I’m mapping the real, slightly messy operational upgrades that actually help when AI moves from demo to default.

From Training to Inference: The Quiet Flip

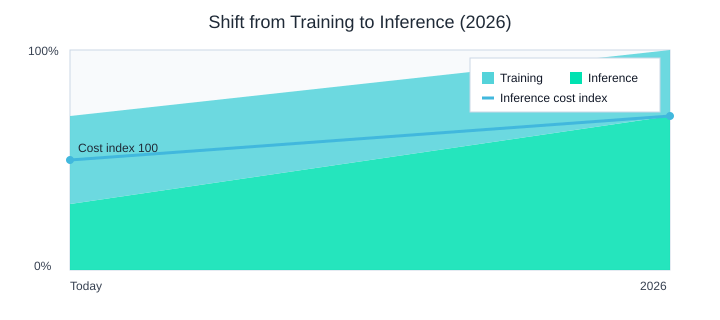

My “oops” moment was simple: I celebrated faster training runs, then realized that inference workloads in cloud deployment would dominate the bill, the pager, and the user experience. In 2026, many orgs are making the same shift, moving from training-heavy work to cloud-native, large-scale inference. The workload mix I now plan for looks like this: training drops from 70% to 30% of the total, while inference climbs from 30% to 70%. That’s where the AI operations wall shows up: traffic grows, and suddenly every small inefficiency becomes a cliff.

“In most enterprises, inference—not training—becomes the defining operational workload, because that’s where users feel latency and finance feels cost.” — Cassie Kozyrkov

When inference becomes the main event

Training is bursty; inference is constant. The targets also tighten: I’ve seen the p95 latency target go from 250ms in a pilot to a 120ms production SLO. Throughput matters, too, because one endpoint can turn into many products. On-call load rises as scaling enterprise AI implementations adds more dependencies, and release cadence flips from weekly to daily. (Informal aside: the dashboards looked “fine” until the bill arrived.)
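
To make the tighter target concrete, here is a minimal sketch of a p95 check against the 120ms SLO. It assumes the nearest-rank percentile method and an integer percentile; `percentile_nearest_rank` and `meets_slo` are hypothetical helper names, not part of any monitoring product.

```python
def percentile_nearest_rank(samples_ms, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be an integer in (0, 100]")
    ordered = sorted(samples_ms)
    # Integer ceiling of pct * n / 100 avoids floating-point rounding surprises.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_slo(samples_ms, slo_ms=120, pct=95):
    """True when the chosen percentile is at or under the SLO (120ms in the text)."""
    return percentile_nearest_rank(samples_ms, pct) <= slo_ms
```

A check like this fits naturally in a release gate: compute it over a recent window of request latencies and block promotion when it returns `False`.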

Checklist: capacity, autoscaling, and cost controls

- Capacity planning: forecast QPS, model size, and peak windows; reserve baseline capacity for SLOs.

- Autoscaling: scale on concurrency and queue depth, not just CPU; test cold-start paths.

- Cost controls: per-endpoint budgets, right-size instances, set alerts on cost/QPS, and cap runaway retries.
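
The checklist above can be sketched as a couple of back-of-the-envelope helpers. This is an illustration under stated assumptions: the headroom factor, per-replica throughput, and hourly price are hypothetical inputs you would measure yourself, and the function names are invented for this example.

```python
import math


def replicas_needed(peak_qps, qps_per_replica, headroom=0.3):
    """Replicas required to serve peak traffic with spare headroom for the SLO."""
    if qps_per_replica <= 0:
        raise ValueError("qps_per_replica must be positive")
    return math.ceil(peak_qps * (1 + headroom) / qps_per_replica)


def cost_per_million_requests(hourly_cost, avg_qps):
    """Dollar cost per one million requests at a steady average QPS."""
    if avg_qps <= 0:
        raise ValueError("avg_qps must be positive")
    requests_per_hour = avg_qps * 3600
    return hourly_cost / requests_per_hour * 1_000_000


def over_budget(hourly_cost, avg_qps, budget_per_million):
    """True when an endpoint's unit cost exceeds its per-endpoint budget."""
    return cost_per_million_requests(hourly_cost, avg_qps) > budget_per_million
```

Alerting on cost per request rather than on total spend keeps the signal meaningful when traffic grows for good reasons.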

Why cloud operations autonomy and automation matter

As AI infrastructure scales and adoption grows, teams can’t wait in a central platform queue to ship fixes. I push for self-serve deploys, policy-based guardrails, and automated rollbacks so inference stays reliable as cloud deployment scales.

| Metric | Pilot | Scaled |
|---|---|---|
| Workload mix | Training 70% / Inference 30% | Training 30% / Inference 70% |
| p95 latency target | 250ms | 120ms |
| Inference cost curve | +40% MoM (first 3 months without controls) | |
| Deployment frequency | Weekly | Daily |

Decoupled Observability Stacks Rise (and why I’m relieved)

A quick confession: I used to want “one platform to rule them all” until my logs/metrics/traces bill did a jump-scare. After our GenAI feature launch, telemetry didn’t just grow; it multiplied. That’s why I’m cheering the rise of decoupled observability stacks, especially when scaling enterprise AI implementations, where every new endpoint, prompt, and agent tool call creates more data.

What decoupled observability means in practice

When I say decoupled observability, I mean we stop treating observability like a single boxed product. We split it into layers:

- Routing/collection: agents, gateways, pipelines decide what flows where

- Storage: hot vs cold tiers (example: 30 days hot / 180 days cold)

- Query: tools can change without rewriting storage choices

“Observability is a design problem first and a tooling problem second; decoupling lets you design for change instead of locking into it.” — Charity Majors

How AI-driven growth breaks monolith tools

Monolith platforms struggle when AI traffic spikes create (1) cardinality explosions from messy tags, (2) longer retention needs for audits, and (3) “surprise queries” from incident response. That’s where the AI operations governance bottleneck shows up: finance asks for cost control, security asks for visibility, and ops gets stuck in the middle.

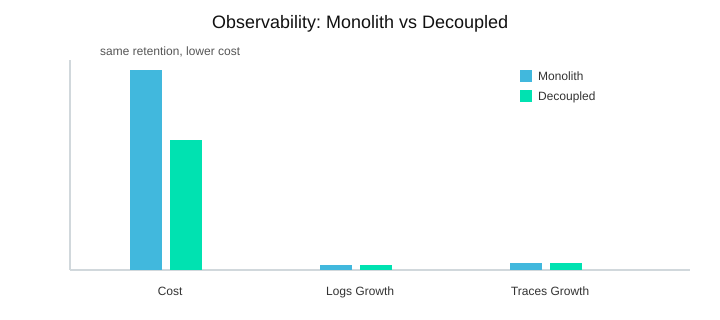

Illustrative numbers from our rollout: Logs +3x, Metrics +2x, Traces +4x. Cost at the same retention: Monolith $120k vs Decoupled $78k.
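
As a quick worked example of what those two cost figures imply, the arithmetic below computes the relative saving. The helper name `savings_pct` is made up for this sketch; the dollar amounts are the illustrative monthly figures from the rollout.

```python
def savings_pct(before, after):
    """Percentage reduction going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


monolith_monthly = 120_000   # illustrative figure from the rollout
decoupled_monthly = 78_000   # illustrative figure from the rollout
saving = savings_pct(monolith_monthly, decoupled_monthly)
print(f"Decoupled stack saves about {saving:.0f}% at the same retention")
```

That works out to a 35% reduction, or $42k per month, at the same retention.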

My survival kit: sampling, guardrails, and visibility SLAs

- Sampling strategies: keep 100% of errors, sample “healthy” traces

- Budget guardrails: per-team caps and alerts before runaway spend

- Visibility SLAs: what must be searchable in hot storage, and for how long
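
The sampling rule above can be sketched as a deterministic keep/drop decision. This is a minimal illustration, not a real collector configuration: `keep_trace` is a hypothetical function, the 10% default rate is an assumption, and hashing the trace ID is just one way to make every service reach the same decision for the same trace.

```python
import hashlib


def keep_trace(trace_id, is_error, healthy_rate=0.10):
    """Keep every error trace; keep a stable fraction of healthy traces."""
    if is_error:
        return True
    # Hash the trace ID into [0, 1) so the decision is repeatable across services.
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < healthy_rate
```

Because the decision depends only on the trace ID, a sampled trace is either kept whole or dropped whole, which avoids partial traces in storage.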

Mini tangent: one weird trace saved a release. A single outlier span showed an agent retry loop. We fixed it fast—and later reduced high-cardinality tags from 120 → 35 with schema hygiene.

| Item | Example |
|---|---|
| Telemetry growth | Logs +3x, Metrics +2x, Traces +4x |
| Monthly cost | Monolith $120k vs Decoupled $78k (same retention) |
| Retention policy | 30 days hot / 180 days cold |
| High-cardinality tags | 120 → 35 |

Hybrid AI Architecture Enterprise: SLMs + RAG at the Edge

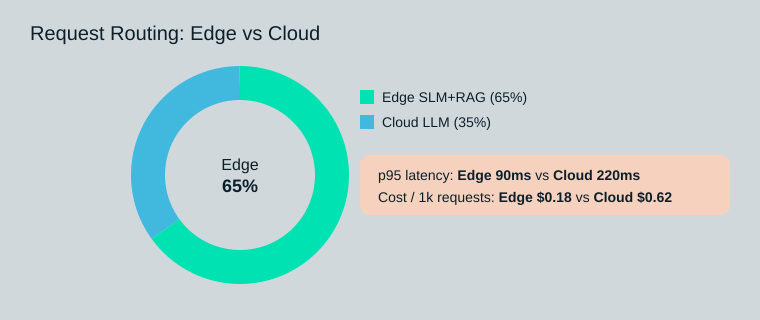

In my Data Science Ops work, I stopped chasing the biggest model for every use case. Frontier LLMs are powerful, but cost and latency add up fast in real systems. What worked better was a hybrid enterprise AI architecture: route most requests to Small Language Models (SLMs) plus Retrieval-Augmented Generation (RAG) systems at the edge, and send only the hard cases to the cloud.

“The winning architecture is rarely one model—it’s a system that retrieves the right context and uses the smallest competent model for the job.” — Andrew Ng

Why I moved from “one big model” to a split system

I think of SLMs as the reliable hatchback: cheap to run, easy to deploy, and good for most daily tasks. Frontier LLMs are the race car: great when you truly need it, but not for every trip. Research insights match what I see in ops: hybrid setups (SLM + RAG at the edge) are becoming the enterprise default to offset rising costs and latency.
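
One way to picture the split is a small routing rule. The sketch below is an assumption-heavy illustration: the `Request` fields, the confidence score, and both thresholds are hypothetical, and a real router would likely use richer signals.

```python
from dataclasses import dataclass


@dataclass
class Request:
    text: str
    retrieved_chunks: int   # approved chunks returned by retrieval (hypothetical field)
    slm_confidence: float   # hypothetical 0..1 score from the edge model


def route(req, min_chunks=1, min_confidence=0.7):
    """Send a request to the edge SLM+RAG path unless it looks like a hard case."""
    grounded = req.retrieved_chunks >= min_chunks
    confident = req.slm_confidence >= min_confidence
    return "edge_slm_rag" if grounded and confident else "cloud_llm"
```

The design choice here is to default to the cheaper path only when there is both retrieved context and a confident small model, and to escalate everything else.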

Retrieval-Augmented Generation (RAG) systems: grounded answers, survivable audits

RAG keeps responses tied to approved documents. That makes audits easier and reduces hallucinations. In ops, I watch three things: cache hit rate, vector DB freshness, and retrieval evaluation (did the model cite the right chunks?). My freshness SLA example is simple: documents updated within 15 minutes.
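
Two of those signals are easy to compute once timestamps and counters are available. The sketch below assumes you can read a last-changed time per source document and a last-indexed time per document from the vector store; the function names and dictionary shapes are hypothetical.

```python
from datetime import datetime, timedelta, timezone

FRESHNESS_SLA = timedelta(minutes=15)  # the SLA example from the text


def stale_documents(source_updated_at, index_updated_at, now, sla=FRESHNESS_SLA):
    """Return ids whose latest source change has been missing from the index longer than the SLA."""
    stale = []
    for doc_id, changed in source_updated_at.items():
        indexed = index_updated_at.get(doc_id)
        index_is_behind = indexed is None or indexed < changed
        if index_is_behind and now - changed > sla:
            stale.append(doc_id)
    return sorted(stale)


def cache_hit_rate(hits, misses):
    """Fraction of lookups served from cache; 0.0 when there is no traffic."""
    total = hits + misses
    return hits / total if total else 0.0
```

A non-empty result from `stale_documents` is a reasonable paging or ticketing signal, since stale context quietly degrades answers without raising errors.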

Model efficiency at the edge: fewer round-trips, faster and safer

With efficient models running inference at the edge, I get latency wins, privacy wins, and fewer cloud calls. Picture a hospital kiosk or a factory line: waiting 220ms p95 can feel slow; 90ms p95 feels instant.

| Metric | Edge SLM+RAG | Cloud LLM |
|---|---|---|
| Architecture split (illustrative requests) | 65% | 35% |
| Latency (p95) | 90ms | 220ms |
| Cost per 1k requests | $0.18 | $0.62 |
| Retrieval freshness SLA | Docs updated within 15 minutes | |
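
Combining the split and the per-path costs in the table gives a blended unit cost. The sketch below is simple weighted-average arithmetic on the illustrative figures; `blended_cost_per_1k` is a hypothetical helper name.

```python
def blended_cost_per_1k(edge_share, edge_cost, cloud_cost):
    """Weighted cost per 1k requests when `edge_share` of traffic stays at the edge."""
    if not 0.0 <= edge_share <= 1.0:
        raise ValueError("edge_share must be between 0 and 1")
    return edge_share * edge_cost + (1.0 - edge_share) * cloud_cost


blended = blended_cost_per_1k(edge_share=0.65, edge_cost=0.18, cloud_cost=0.62)
print(f"Blended: ${blended:.3f} per 1k requests vs $0.62 all-cloud")
```

With the illustrative numbers, the hybrid setup lands at roughly $0.33 per 1k requests, a bit under half the all-cloud figure.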

AI Operations Wall Companies Hit (and how I’d spot it earlier)

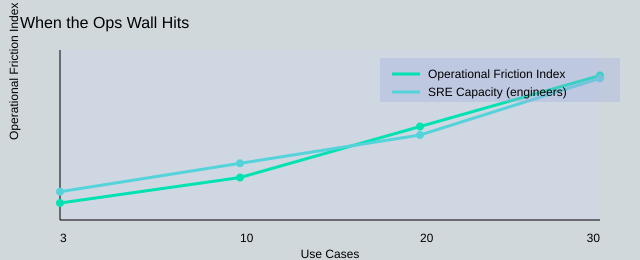

The pattern I’ve seen is simple: pilots are cute; production is political. In one meeting, someone used “pilot” as a synonym for “no rules yet,” and everyone nodded. That’s the early smell of the AI operations wall that companies hit when they move from isolated wins to scaling enterprise AI implementations across finance, manufacturing, or healthcare.

Where the wall shows up (usually first)

- Access controls: who can see prompts, features, and outputs?

- Data contracts: what breaks when upstream tables change?

- On-call ownership: who gets paged at 2 a.m. for model drift?

- “Who approves this model?”: security, legal, risk, and audit all want a say.

Illustrative scale threshold: going from 3 pilots → 30 production use cases is where friction spikes and “just ship it” stops working.

“Reliability isn’t a feature you bolt on later; it’s the cost of admission once AI leaves the lab.” — Liz Fong-Jones

Why scaling AI SRE teams is the hidden unlock

When cloud-native and generative AI workloads land on Kubernetes, scaling the AI SRE team becomes the real constraint. I’ve watched on-call coverage jump from 1 MLE → an AI SRE pod of 5 within 2 quarters, because you need Kubernetes depth, runbooks, and incident muscle, not hero debugging.

This is also where AI factory infrastructure development gets real: clusters grow fast (e.g., 20 → 80 nodes during rollout), and queueing becomes a bottleneck. Cloud-native job queueing like Kueue is getting adopted because it can cut wait time (e.g., 45 min → 12 min) for shared HPC/AI workloads.

A pragmatic escalation ladder

- Model owner: quality, drift, and retraining triggers

- Platform owner: pipelines, Kubernetes, queues, SLOs

- Risk owner: approvals, audit trails, policy exceptions
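
The ladder above can be written down as data so that alert routing is not tribal knowledge. This is a minimal sketch: the signal names and the default owner for unrecognized signals are assumptions made for illustration.

```python
ESCALATION_LADDER = {
    "model_owner": {"quality", "drift", "retraining_trigger"},
    "platform_owner": {"pipeline", "kubernetes", "queue", "slo"},
    "risk_owner": {"approval", "audit_trail", "policy_exception"},
}


def owner_for(signal, default="platform_owner"):
    """Return the owner responsible for a signal type."""
    for owner, signals in ESCALATION_LADDER.items():
        if signal in signals:
            return owner
    # Assumption: unrecognized signals go to the platform on-call first.
    return default
```

Keeping the mapping in version control means a change in ownership is reviewed like any other change.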

| Signal | Illustrative change |
|---|---|
| Ops wall trigger | 3 pilots → 30 production use cases |
| Team shift | 1 MLE on-call → AI SRE pod of 5 (2 quarters) |
| Cluster growth | 20 → 80 Kubernetes nodes |
| Queue improvement (Kueue) | 45 min → 12 min wait |

Solidified Data Governance for AI: Boring work, outsized payoff

My unpopular opinion: solidifying data governance for AI is the fastest path to moving quicker later. In the work behind this post, the pattern was clear: teams that tried to scale from pilot to production without solid governance hit the same wall: no reliable model accuracy checks, weak data quality verification, and fuzzy lineage mapping.

MLOps data governance frameworks that actually stick

The MLOps data governance frameworks I’ve seen work are not huge rulebooks. They are a few “always-on” controls baked into pipelines:

- Lineage: dataset version, feature set, training run, and deployment link.

- Approvals: lightweight sign-off for new data sources and schema changes.

- Model accuracy checks: baseline comparisons, drift checks, and rollback rules.
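
Two of those controls fit in a few lines. The sketch below assumes a content hash as the dataset version and a fixed accuracy-drop threshold as the rollback rule; the class, function names, identifiers, and the 0.02 threshold are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class LineageRecord:
    dataset_version: str   # content hash, so "same table name" cannot hide different rows
    feature_set: str
    training_run: str
    deployment: str


def dataset_fingerprint(rows):
    """Order-independent SHA-256 over JSON-serialized rows (a list of dicts)."""
    hasher = hashlib.sha256()
    for line in sorted(json.dumps(row, sort_keys=True) for row in rows):
        hasher.update(line.encode("utf-8"))
        hasher.update(b"\n")
    return hasher.hexdigest()


def should_roll_back(baseline_accuracy, candidate_accuracy, max_drop=0.02):
    """Roll back when the candidate is worse than the baseline by more than max_drop."""
    return (baseline_accuracy - candidate_accuracy) > max_drop
```

Recording a fingerprint at training time is what makes a later audit reproducible: if the hash no longer matches, the data changed, whatever the table is called.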

Small tangent: the first time I tried to audit a model, I realized I couldn’t reproduce the dataset. Same table name, different rows. That’s when governance stopped feeling “nice to have” and started feeling like oxygen.

“Governance done right is a product: it reduces cognitive load and makes the safe path the easy path.” — Hilary Mason

Generative AI for data quality: shifting focus from output to input

One research insight I’m betting on: in 2026, generative AI work on data quality shifts attention from “better answers” to “better inputs.” Practically, I use GenAI to:

- Classify fields (PII, financial, operational) automatically.

- Detect inconsistencies (unit mismatches, invalid categories, missing joins).

- Enrich metadata (descriptions, owners, freshness, allowed values).
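
Whatever a GenAI step proposes, I find it helps to pin the resulting rules down as plain, testable checks. The sketch below is a rule-based stand-in, not a GenAI implementation: it flags missing required fields and invalid categories, and the function name and issue labels are hypothetical.

```python
def find_inconsistencies(rows, allowed_values, required_fields):
    """Return (row_index, field, issue) tuples for missing values and invalid categories."""
    issues = []
    for index, row in enumerate(rows):
        for field_name in required_fields:
            if row.get(field_name) in (None, ""):
                issues.append((index, field_name, "missing"))
        for field_name, allowed in allowed_values.items():
            value = row.get(field_name)
            if value not in (None, "") and value not in allowed:
                issues.append((index, field_name, "invalid_category"))
    return issues
```

For example, a row with `unit` set to `"lbs"` when only `"kg"` and `"g"` are allowed would be reported as an `invalid_category` issue on the `unit` field.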

Data mesh operating models vs centralized control: where I’d draw the line

I like data mesh operating models for domain ownership, but I keep central control for: identity/access, shared definitions, and audit-grade lineage. Domains can move fast—if the guardrails are consistent.

| Metric | Before | After |
|---|---|---|
| Governance maturity (illustrative) | Stage 1 ad hoc | Stage 4 policy-driven |
| Lineage coverage target (6 months) | 40% | 90% |
| Data quality checks per pipeline | 5 | 18 |
| Metadata enrichment throughput | 500 fields/day | 3,000 fields/day |

Figure: Governance & Data Quality Gains (lineage coverage, checks per pipeline, and metadata fields/day; before vs. after)

Wild Card: Agentic Operating System Foundation (the fun, risky part)

In my notes from Data Science Operations Enhanced with AI: Real Results, the most exciting idea is an agentic operating system foundation: agents that don’t just answer questions, but run parts of ops. I think of them as interns with superpowers, and zero context unless you give it to them.

A hypothetical day in 2026 (coffee included)

I open my laptop and an agent has already opened a ticket, queried the right tables, drafted a fix, and posted a pull request. Then it pings me: “Approve write actions?” I sip coffee (nervously) and review the diff, the logs, and the rollback plan before I click yes.

Agentic AI complex workflows: planning, tool calls, handoffs

This is where agentic AI’s complex workflows show up: the agent plans steps, calls tools, and hands work to other agents when scope grows. Research trends suggest these systems will move from single-purpose helpers to multi-functional agents that can reason, plan, and complete complex tasks.

- Multi-agent workflow steps: 4 → 9 as scope grows

- Tool calling + planning + review gates

Agent behavior: policy-driven schemas (guardrails)

I only trust agents when the policy-driven schemas governing their behavior are strict: explicit contracts, hardened releases, tool permissions, and audit logs. Otherwise they might “helpfully” delete the wrong thing.

- 5 read-only tools

- 2 write tools with approval

- Risk controls checklist: 3 (pilot) → 12 (production)
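
A minimal sketch of such a policy, assuming a deny-by-default allowlist with an approval gate on write tools. The tool names are hypothetical placeholders chosen to match the 5 read-only / 2 write split above, and `ToolPolicy` is not an API from any agent framework.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ToolPolicy:
    read_only: frozenset
    write_with_approval: frozenset
    audit_log: List[Tuple[str, Optional[str], bool]] = field(default_factory=list)

    def authorize(self, tool: str, approved_by: Optional[str] = None) -> bool:
        """Allow read tools, allow write tools only with a named approver, deny the rest."""
        if tool in self.read_only:
            allowed = True
        elif tool in self.write_with_approval:
            allowed = approved_by is not None
        else:
            allowed = False  # unknown tools are denied by default
        # Every decision is recorded, including denials.
        self.audit_log.append((tool, approved_by, allowed))
        return allowed


POLICY = ToolPolicy(
    read_only=frozenset(
        {"query_tables", "read_logs", "read_metrics", "read_tickets", "read_catalog"}
    ),
    write_with_approval=frozenset({"open_pull_request", "update_ticket"}),
)
```

Logging denials as well as approvals matters here: the audit trail should show what the agent tried to do, not only what it was allowed to do.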

“Agents will be useful the moment we treat them like software: versioned, observable, and constrained by explicit contracts.” — Chip Huyen

Team workflow orchestration: what I’d automate first

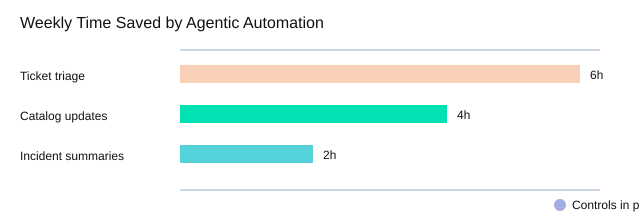

For team workflow orchestration, I’d start by automating the boring, repeatable wins:

| Automation candidate | Illustrative impact |
|---|---|
| Ticket triage | 6 hrs/week saved |
| Data catalog updates | 4 hrs/week saved |
| Incident summaries | 2 hrs/week saved |

Platform Modernization AI Literacy: The people part I underestimated

In my “AI-Boosted Data Science Ops: Real-World Wins” work, I assumed platform modernization would be the hard part. I was wrong. The surprise was simple: tooling wasn’t the bottleneck—shared language was. We could ship pipelines and guardrails, but teams still talked past each other about prompts, model limits, and what “good enough” meant. That gap created more shadow deployments than any missing feature.

AI literacy training programs for everyone (not just engineers)

We treated AI literacy for platform modernization like data literacy: essential at every level to reduce change fatigue and build sustainable AI capability. Our AI literacy training programs focused on three basics: how to write and test prompts, how to spot risk (privacy, leakage, bias), and how to question outputs instead of trusting them.

“AI literacy isn’t about turning everyone into an engineer; it’s about giving everyone the confidence to ask better questions and spot risk.” — Fei-Fei Li

One moment made it real. A non-technical teammate in customer ops flagged that a draft evaluation set included fields that could reveal account identity. Because they’d been trained, they recognized data leakage risk before it hit production. That single catch saved weeks of rework—and a lot of awkward conversations.

More All-In Adopters: pacing change without burnout

For teams going all-in on adoption, the lesson is pacing. We measured “change fitness” with a quick pulse score. After role-based training, the change fatigue pulse moved from 8 → 6 on a 1–10 scale. People weren’t just compliant; they felt capable.

Key Themes Leaders Watch: my quarterly scorecard

In the next review, I’d track completion, fatigue, and shadow AI. With governance plus literacy, shadow AI incidents dropped 9 → 3 per month—proof that operating model + training beats policing.

| Item | Illustrative target / result |
|---|---|
| Training levels | Basics, Practitioner, Steward |
| Completion targets | 60% (M2), 85% (M4), 95% (M6) |
| Change fatigue pulse (1–10) | 8 → 6 after role-based training |
| Shadow AI incidents/month | 9 → 3 after governance + literacy |

Figure: AI Literacy Rollout & Risk Reduction (x-axis: months 0, 2, 4, 6; series: completion % and shadow incidents)

My closing takeaway: platform work scales when people do. When literacy becomes part of the operating model, modernization stops being a one-time project and turns into a steady, safer way of working.

TL;DR: AI is pushing data science ops from training-centric to inference-first. The biggest wins come from hybrid architectures (SLMs + RAG), decoupled observability, AI-SRE capability, and hardened governance + data quality programs—before agentic workflows make complexity explode.