I used to collect executive programmes the way some people collect tote bags: proof I’d “been there,” not proof anything changed back at work. Then a colleague asked me a brutally simple question after one AI session: “So… what are we doing differently on Monday?” That question (and my lack of an answer) is why I now treat any AI masterclass as a strategy lab: two days to cut hype, map leadership priorities, and leave with an AI adoption plan that survives the first budget meeting. In this post I’m outlining the Masterclass Leadership AI Strategy Guide I wish I’d had—complete with the awkward trade-offs, the human advantage bits AI can’t replace, and a couple of wild-card experiments to keep it real.

1) Why I treat an AI masterclass like a strategy sprint (ai masterclass + leadership impact)

I don’t treat an AI masterclass as training. I treat it like a strategy sprint, because leadership teams don’t need more information—they need decisions that change what happens next week. I use what I call my Monday test: if the programme can’t change Monday’s agenda, it’s entertainment. That one rule keeps the focus on leadership impact, not AI hype.

My “Monday test” for leadership teams

Before the first session, I look at my calendar and ask: what meeting, metric, or priority should be different because of this masterclass? If the answer is “nothing,” then we’re not doing strategy—we’re collecting trivia. In a real sprint, every hour should move a decision forward: where we invest, what we stop, and what risks we accept.

One strategic insight per day (that I can defend)

I set a personal goal: one strategic insight per day that I can defend in the C-suite. Not “AI is changing everything,” but something specific like: “This workflow is a bottleneck, and we can redesign it,” or “This customer promise needs a human-in-the-loop.” If I can’t explain it clearly, it’s not ready for leadership.

AI as a forcing function, not a tool hunt

The AI revolution is useful because it forces a review of leadership priorities. I use the masterclass to pressure-test basics: accountability, decision rights, risk appetite, and how we measure value. Tools come later. Strategy comes first.

Mini-exercise: the human advantage list

I ask the team to write down three decisions that only humans should own. This becomes our “human advantage list.”

- Values trade-offs: what we will not do, even if it is profitable.

- High-stakes judgment: decisions with legal, safety, or reputational risk.

- Trust moments: customer or employee conversations where empathy matters.

Wild card: the Financial Times op-ed test

I like a strange prompt: imagine you’re writing a Financial Times op-ed in 90 days about your AI strategy. What would you need to prove? I usually hear: measurable outcomes, clear governance, and real examples—not demos.

Quick tangent: the relief of “we don’t know yet”

One of the best moments in an AI masterclass is when a leadership team says, “We don’t know yet.” It sounds weak, but it’s often the start of honest strategy. It creates space to test, learn, and set boundaries—without pretending certainty we don’t have.

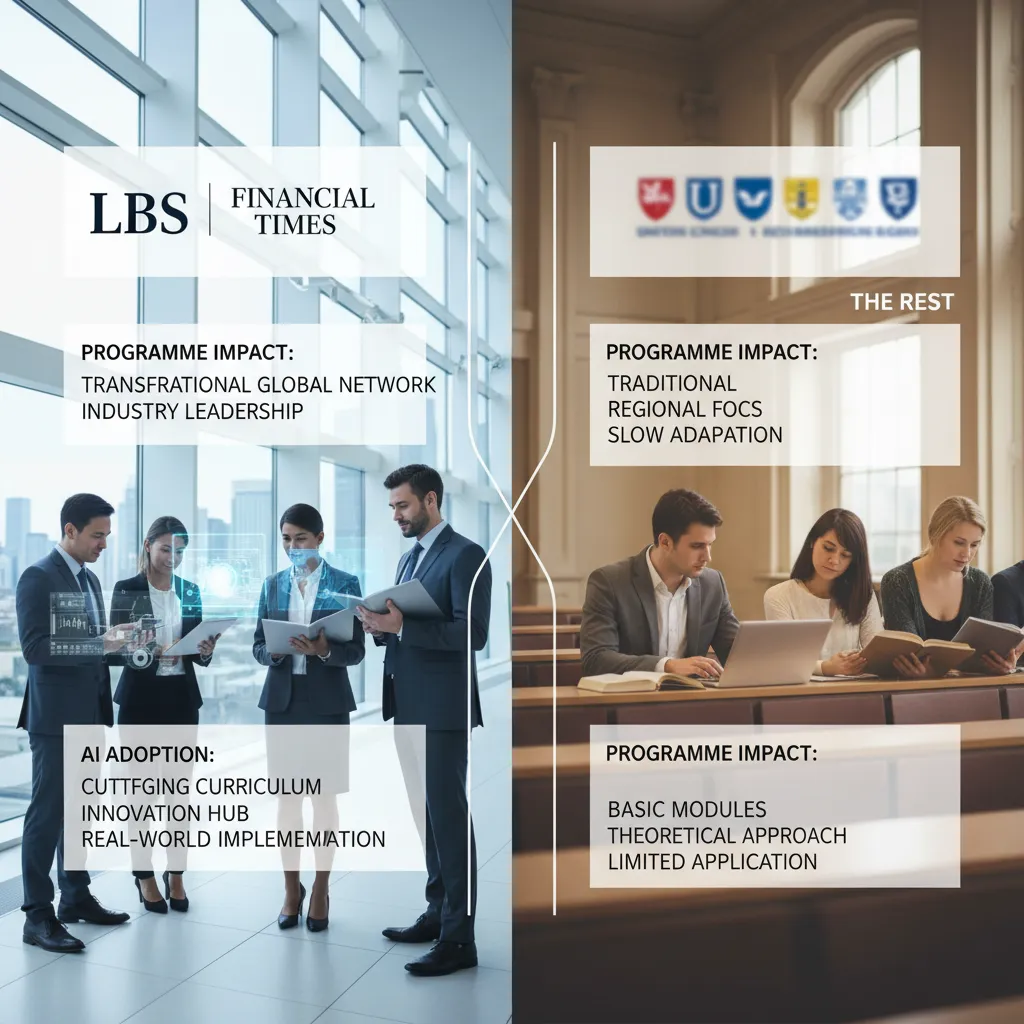

2) Choosing the right programme: LBS + Financial Times vs the rest (programme impact + ai adoption)

When I compare AI programmes, I do it the way a finance partner would: price, hours, outputs, and follow-through. The goal is not “learning AI.” The goal is adoption: decisions made faster, better governance, and teams using GenAI safely in real workflows.

A finance-style comparison: what you buy vs what you get

| Lens | LBS + Financial Times | Most alternatives |
|---|---|---|
| Price | Premium, often justified by senior time saved | Low to mid; easy to approve, easy to ignore |
| Hours | Focused blocks + pre-work; less “content,” more application | More videos, more modules, less pressure to apply |
| Outputs | Shared language, decision frameworks, clearer AI strategy choices | Tips, tools, prompts; uneven link to business outcomes |
| Follow-through | Peer accountability + leadership-level commitments | Depends on self-discipline; drop-off is common |

What you’re really paying for with LBS + Financial Times

In my experience, the premium is not the slides. It’s the peer room and the frameworks. The peer room matters because leaders calibrate risk and ambition by hearing how other firms are handling data, compliance, and operating model change. The frameworks matter because they turn “AI excitement” into choices: where to automate, where to augment, and where to redesign the work.

When a $10/month GenAI series is enough (and when it’s a false economy)

A low-cost GenAI series is enough when you need breadth: basic literacy, common tools, and quick wins like better briefs, faster research, or first-draft content. It becomes a false economy when leaders leave with skills but no system. I watch for these red flags:

- No agreed use cases tied to P&L or risk

- No data rules (what can/can’t go into tools)

- No owner for adoption (training, metrics, governance)

My rule: depth vs breadth

If your business model is in flux, pick depth. You need strategy, operating model decisions, and governance. If skills are the gap, pick breadth. You need many people to reach “good enough” quickly.

Anecdote: the “executive duo” that disagreed

I once sent an executive duo—one commercial lead, one risk lead—to the same programme. They came back with opposite conclusions: one wanted to move fast and scale copilots; the other wanted tighter controls first. That tension was useful. It forced a real leadership trade-off discussion, and we left with a clearer sequence: guardrails first, then scale, with measurable adoption targets.

3) Generative AI, but make it boring: where business value actually shows up (business model + ai strategy)

When I talk with leadership teams about generative AI, I start with a simple business model question: are we trying to grow revenue, cut cost, reduce risk, or move faster? Pick one lane first. If we try to do all four at once, we usually end up with a lot of demos and not much impact. A clear lane also makes the AI strategy easier: it tells us what to prioritize, what to measure, and what to stop.

Map 3–5 “value wedges” where GenAI can actually land

Following the Masterclass Leadership AI Strategy Guide, I treat generative AI as a set of practical wedges, not a magic layer over the whole company. I usually map 3–5 wedges and assign an owner to each:

- Support: draft replies, summarize tickets, suggest next steps, improve knowledge base articles.

- Sales enablement: first drafts of outreach, call summaries, proposal outlines, account research briefs.

- Product: help with specs, user story drafts, QA test cases, and customer feedback themes.

- Operations: SOP drafts, meeting notes, internal search, faster reporting and handoffs.

- Compliance / risk: policy Q&A, document checks, audit prep summaries, controlled content generation.

Define what “good” looks like (before the pilot)

Generative AI value shows up when we can measure it in plain terms. I define success with a few simple metrics tied to the lane we chose:

- Time saved per task (minutes per ticket, hours per proposal)

- Errors reduced (rework rate, missed fields, incorrect claims)

- Cycle time (lead-to-quote, ticket-to-resolution, month-end close)

- Customer satisfaction (CSAT, NPS comments, response quality)

A quick thought experiment to find the real wedge

I ask: if we lost all generative AI tomorrow, which process would hurt most? The answer is usually the process with repeatable work, clear inputs, and a real bottleneck. That’s where we should invest in data access, workflow design, and change management—not just prompts.

Informal aside: the pilot graveyard is real—lots of small tests, no production rollout, no owner, no metrics.

To avoid it, I keep pilots boring: one wedge, one workflow, one metric set, and a clear path to production if it works.

4) Leadership skills in the AI era: the human advantage I can’t automate (human advantage + leadership skills)

In the AI era, my job is not to “out-compute” a model. My job is to lead. The biggest lesson I’ve taken from the Masterclass Leadership AI Strategy Guide is that strong AI outcomes come from strong human leadership. AI can draft, predict, and optimize, but it cannot carry responsibility. That part stays with me and my leadership team.

The non-delegables I keep in human hands

When AI enters core workflows, I name the non-delegables up front. These are the leadership skills I can’t automate:

- Judgment: deciding what matters, what trade-offs are acceptable, and when “accurate” is still not “safe.”

- Accountability: owning the outcome, especially when the model is wrong or harms trust.

- Empathy: understanding how decisions land on customers, employees, and communities.

- Context-setting: defining the goal, the boundaries, and what “good” means in our real world.

A human-centered leadership model for AI decisions

I don’t let AI projects float between teams. I assign clear roles so decisions don’t disappear into “the model said so.” My simple operating model looks like this:

- Who decides: the business owner makes the call on use, scope, and go-live.

- Who reviews: a cross-functional group checks data, bias, security, and performance.

- Who owns risk: one accountable executive signs off on risk, controls, and monitoring.

A new meeting rhythm: reviews, incidents, and learning loops

Leadership teams need a cadence that matches how AI behaves in the wild. I build three recurring meetings:

- Model reviews: performance, drift, edge cases, and user feedback.

- Incident reviews: what broke, who was impacted, what we changed.

- Learning loops: what we learned, what we will test next, what we will stop.

“The first time I asked, ‘What would we tell a regulator?’—silence was the answer.”

That moment taught me that leadership is the safety system. My micro-habit now is simple: before any pilot goes live, I write one sentence about ethical guardrails. For example:

We will not automate decisions that deny service without a human review and an appeal path.

5) An AI adoption roadmap I’d actually use (ai adoption roadmap + future proof)

If I’m leading an AI shift, I don’t start with tools. I start with a simple AI adoption roadmap that protects focus, reduces risk, and stays future-proof as models change. Here’s the version I’d actually run with a leadership team.

Phase 1 (0–30 days): Align, assign, and set guardrails

In the first month, my goal is clarity. We align on the few leadership priorities where AI can move a real metric, not just “increase innovation.” Then we pick owners and set rules so teams can move fast without creating new risk.

- Align leadership priorities: choose 2–3 outcomes (cost, speed, quality, revenue).

- Pick owners: one executive sponsor, one product/process owner, one technical lead.

- Set guardrails: data boundaries, approval steps, vendor rules, and “no-go” use cases.

I also define what “good” looks like in plain terms: time saved per week, error rate, cycle time, or conversion lift.

Phase 2 (31–90 days): Run 2–3 controlled pilots with measurable value

Next, I run a small set of pilots that are controlled, measurable, and tied to business value. I avoid pilots that require perfect data or major system rebuilds. Each pilot gets a baseline, a target, and a clear stop rule.

- Pilot selection: high volume work, clear inputs/outputs, and a known pain point.

- Measurement: before/after metrics, plus adoption (who used it, how often).

- Risk checks: privacy, bias, security, and human review steps.
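To make “baseline, target, and stop rule” concrete, here is a minimal Python sketch of how I might score a pilot at day 90. Treat every name and threshold in it as a hypothetical assumption: the `PilotResult` fields, the 50% adoption floor, and the rule that a pilot scales only if it beats its time-saving target and enough eligible users actually use it. Your own numbers should come from the Phase 1 definition of “good.”

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """Hypothetical one-pilot scorecard; field names are illustrative only."""

    name: str
    baseline_minutes: float  # average minutes per task before the pilot
    observed_minutes: float  # average minutes per task during the pilot
    target_saving: float     # agreed target, e.g. 0.20 means 20% faster
    active_users: int        # adoption: people who actually used the tool
    eligible_users: int      # people who could have used it


def time_saving(p: PilotResult) -> float:
    """Fraction of time saved per task versus the baseline."""
    if p.baseline_minutes <= 0:
        raise ValueError("baseline_minutes must be positive")
    return (p.baseline_minutes - p.observed_minutes) / p.baseline_minutes


def adoption_rate(p: PilotResult) -> float:
    """Share of eligible users who used the tool during the pilot."""
    if p.eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    return p.active_users / p.eligible_users


def decide(p: PilotResult, min_adoption: float = 0.5) -> str:
    """Assumed stop rule: scale only if the pilot hits its value target
    AND enough eligible users adopted it; otherwise retire it."""
    if time_saving(p) >= p.target_saving and adoption_rate(p) >= min_adoption:
        return "scale"
    return "retire"


if __name__ == "__main__":
    # Made-up pilots for illustration only.
    pilots = [
        PilotResult("support replies", 12.0, 9.0, 0.20, 30, 40),
        PilotResult("sales briefs", 60.0, 57.0, 0.20, 35, 40),
        PilotResult("ops notes", 30.0, 20.0, 0.20, 5, 40),
    ]
    for p in pilots:
        print(f"{p.name}: saving={time_saving(p):.0%}, "
              f"adoption={adoption_rate(p):.0%} -> {decide(p)}")
```

With these made-up numbers, only “support replies” clears both bars; “sales briefs” misses the value target and “ops notes” misses the adoption floor. I keep adoption in the rule on purpose: a pilot that saves time for five people is a demo, not an operating change.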

Phase 3 (91–180 days): Scale what worked, retire what didn’t, update assumptions

By month six, I treat AI like any other operating change: scale the winners, shut down the rest, and update the business model assumptions that no longer hold (cost-to-serve, staffing mix, speed of delivery, and customer expectations).

The “red team” moment (lovingly)

Before scaling, I assign someone to try to break the plan: find failure modes, hidden costs, and compliance gaps—then we fix them.

Wild card: my 2028 new-hire test

I imagine explaining the strategy to a new hire in 2028. What stays true? We protect customer trust, measure outcomes, keep humans accountable, and design systems that can swap models without rewriting the business.

6) My ‘masterclass membership’ aftercare: how learning turns into thought leadership

In the AI Masterclass Playbook for Leadership Teams, the real value shows up after the sessions end. I treat “aftercare” as a simple system that keeps our AI strategy current and turns learning into calm, credible thought leadership. If we don’t build this loop, the Masterclass Leadership AI Strategy Guide becomes a one-time event instead of an operating rhythm.

A lightweight learning stack that doesn’t overwhelm leaders

My rule is one deep programme plus one ongoing membership or series. The deep programme gives shared foundations: what AI can do, where it fails, and how to govern it. The ongoing membership keeps us updated without chasing every headline. This stack is light enough for busy leadership teams, but strong enough to prevent “random acts of AI.”

From notes to a monthly internal memo

Every month, I turn my notes into a short internal memo. I keep it practical: what changed in the AI landscape, what new risks or rules appeared, and what we are testing right now. I also name what we are not doing and why, because focus is part of strategy. Over time, these memos become a living record of decisions, not just opinions.

Thought leadership with an ethical spine

I borrow a key idea from the IABC Master Class: thought leadership is not louder marketing; it is clearer thinking in public. That means we share what we know, but we also show our limits. I check every message against a basic ethical spine: transparency, privacy, fairness, and human accountability. If a claim needs a footnote, I add it. If a demo hides risk, I say so.

My “AI era glossary” habit

I keep a running glossary so leaders don’t talk past each other. Terms like “agent,” “RAG,” “fine-tuning,” “hallucination,” and “model risk” can mean different things in different rooms. A shared glossary reduces confusion, speeds decisions, and improves cross-team trust.

Closing the loop with one honest case study

To finish the cycle, I share one internal case study—warts and all. What we tried, what broke, what we learned, and what we changed in our process. That honesty is how culture shifts. It turns AI learning into leadership behaviour, and leadership behaviour into real thought leadership people can believe.

TL;DR: Pick the right AI masterclass for your leadership teams, show up with a business model question (not a tech wish list), leave with an AI adoption roadmap, ethical guardrails, and a human-centered leadership rhythm that turns learning into business value.