I’ll never forget the first weekend our dashboard blinked green: conversions spiked, and I felt part elated, part suspicious. We had rolled out a handful of AI personalization experiments: targeted emails, live product recommendations, and a subtle ‘vibe’ tweak on mobile. What followed was a steady climb that ended in a 180% increase in our conversion rate. In this post I walk you through the messy, human side of building that result: the wins, the dead ends, and the concrete tactics that actually moved the needle.

The Odd Trio: Why I Focused on Email, On-site Recs, and Quizzes

When I first started working on AI personalization, I didn’t begin with flashy tech or a full rebuild of our site. I started with three unglamorous plays that were easy to launch and easy to measure: automated email flows, on-site product recommendations, and a zero-party data quiz. They weren’t exciting on paper, but they gave me fast feedback on what personalization could really do for our conversion rate.

Why these three worked together

I picked this trio because each one hits a different moment in the funnel. Instead of betting everything on one channel, I could test personalization across the full customer journey without blowing the budget or our engineering roadmap.

- Awareness: the quiz pulled people in and gave them a reason to engage.

- Consideration: on-site recommendations helped shoppers compare options faster.

- Purchase: email drip flows nudged people back when they were close to buying.

Email flows: simple triggers, big leverage

Email was my first stop because it’s controlled, measurable, and cheap to iterate. With AI-driven segments, I could personalize subject lines, product picks, and timing based on behavior—like browsing, cart activity, or past purchases. Even basic flows (welcome, browse abandon, cart abandon) became smarter when the content matched what the customer actually cared about.

On-site recommendations: help people decide

On-site recs were my “in-the-moment” personalization layer. When someone landed on a product page, AI could suggest similar items, better-fit alternatives, or bundles. This mattered because many visitors weren’t ready to buy—they were trying to narrow down choices. Recommendations reduced that effort and kept them moving forward.

The quiz: the surprise growth engine

The zero-party data quiz was the most surprising part. I expected it to be a small tool for better targeting. Instead, it became a customer-facing story people wanted to share.

The quiz didn’t just collect data—it earned it.

Because customers told us their preferences directly, the signals were cleaner than anything we used to get from third-party cookies. That quiz output fed our AI personalization across email and on-site recs, creating a loop where every new response made the next experience more relevant.
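To make the loop concrete, here is a minimal sketch of how quiz answers could become preference tags that re-rank products. The tag format, the `Product` type, and the function names are hypothetical illustrations, not our production schema; a real system would also weight tags and merge them with behavioral signals.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    sku: str
    tags: set[str] = field(default_factory=set)  # hypothetical attribute tags, e.g. "fit:relaxed"


def profile_from_quiz(answers: dict[str, str]) -> set[str]:
    """Turn quiz answers (question -> chosen option) into preference tags."""
    return {f"{question}:{answer}" for question, answer in answers.items()}


def rank_for_profile(products: list[Product], profile: set[str]) -> list[Product]:
    """Order products by how many stated preferences they match, best first."""
    return sorted(products, key=lambda p: len(p.tags & profile), reverse=True)
```

Because Python's `sorted` stays stable even with `reverse=True`, products with the same number of matches keep their original order, so a quiz profile re-ranks an existing list without discarding its baseline ordering.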

Building the Engine: Personalization at Scale (Data + Models)

At first, our “personalization” was mostly rules: if someone viewed a product twice, show a discount; if they came from email, push bestsellers. It worked, but it didn’t scale. Every new segment meant more manual logic, and the experience still felt generic. So I moved us from rules to models, step by step, to personalize faster without guessing.

From Rules to Models (Without Overbuilding)

I started simple with collaborative filtering: “people like you also bought…” based on real purchase patterns. Then I added a lightweight content-based layer so recommendations could still work when we had limited history (new products, new visitors). Finally, we combined both into a hybrid model that balanced similarity and behavior.

- Collaborative filtering: learns from group behavior (what similar customers buy).

- Content-based: learns from product attributes (category, use case, price range).

- Hybrid: blends both so we reduce cold-start issues and keep relevance high.
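The three approaches can be sketched on toy data. This is a minimal illustration, assuming item-item collaborative filtering via cosine similarity of buyer sets, Jaccard overlap of attributes for the content-based part, and a hypothetical `min_history` cutoff that shifts weight toward content for cold-start items. The products and customers are made up, and this is not the exact model we ran.

```python
import math

# Hypothetical toy data: which customers bought which items, and item attributes.
purchases = {
    "u1": {"tent", "stove"},
    "u2": {"tent", "stove", "lantern"},
    "u3": {"lantern"},
}
attributes = {
    "tent": {"camping", "shelter"},
    "stove": {"camping", "cooking"},
    "lantern": {"camping", "lighting"},
    "new_tarp": {"camping", "shelter"},  # new product: nobody has bought it yet
}


def buyers(item: str) -> set[str]:
    return {u for u, items in purchases.items() if item in items}


def collaborative_sim(a: str, b: str) -> float:
    """Cosine similarity between two items' buyer sets (item-item CF)."""
    ba, bb = buyers(a), buyers(b)
    if not ba or not bb:
        return 0.0
    return len(ba & bb) / math.sqrt(len(ba) * len(bb))


def content_sim(a: str, b: str) -> float:
    """Jaccard overlap of product attributes (content-based)."""
    xa, xb = attributes[a], attributes[b]
    union = xa | xb
    return len(xa & xb) / len(union) if union else 0.0


def hybrid_sim(a: str, b: str, min_history: int = 2) -> float:
    """Blend both; lean on content when either item has little purchase history."""
    history = min(len(buyers(a)), len(buyers(b)))
    w_cf = min(history / min_history, 1.0)  # 0 for a cold-start item, 1 once history suffices
    return w_cf * collaborative_sim(a, b) + (1 - w_cf) * content_sim(a, b)
```

With this blend, the brand-new `new_tarp` still gets a meaningful similarity to `tent` through shared attributes, while established items are compared on what customers actually bought together.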

First-Party Data Became the Backbone

To make AI personalization reliable, I focused on first-party data we could trust and explain. We didn’t need “big data.” We needed clean data tied to real intent.

| Data Type | Examples We Used | How It Helped |
| --- | --- | --- |
| Purchase history | Items, frequency, bundles | Improved repeat and cross-sell recommendations |
| Zero-party data | Quiz answers, preferences | Made personalization accurate from session one |
| In-session signals | Clicks, scroll depth, time on page | Adjusted messaging in real time |

Orchestration Over Perfect Predictions

I learned quickly that the best model doesn’t always produce the best outcome, so I prioritized orchestration: routing the right message at the right time using AI-driven rules. For example:

- If quiz intent is high, show a tailored bundle.

- If browsing is broad, recommend categories, not products.

- If cart hesitation appears, trigger reassurance (shipping, returns), not discounts.
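The three rules above can be sketched as a small routing function. The `Session` fields, thresholds, and action names are hypothetical, and the priority order (cart hesitation checked first, since that shopper is closest to buying) is an assumption rather than a documented part of our setup.

```python
from dataclasses import dataclass


@dataclass
class Session:
    quiz_intent: float      # hypothetical 0..1 score derived from quiz answers
    categories_viewed: int  # distinct categories browsed this session
    cart_hesitation: bool   # e.g. cart left idle or checkout page exited


def next_best_action(s: Session, high_intent: float = 0.7, broad_browse: int = 3) -> str:
    # Rules are checked in priority order; the first match wins.
    if s.cart_hesitation:
        return "show_reassurance"  # shipping and returns info, not a discount
    if s.quiz_intent >= high_intent:
        return "show_tailored_bundle"
    if s.categories_viewed >= broad_browse:
        return "recommend_categories"
    return "recommend_products"
```

Keeping orchestration as explicit, ordered rules on top of model scores makes it easy to see why a visitor got a given experience, which matters more here than squeezing extra accuracy out of any single prediction.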

“We didn’t win by predicting everything. We won by delivering the next best step.”

Privacy-First by Design

Because AI depends on data, I built guardrails early: limited retention windows, clear consent, and a strong bias toward zero-party inputs. We stored only what we needed, for only as long as we needed it, and we made opt-outs simple. That trust made customers more willing to share preferences—fueling better personalization without crossing lines.
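Retention windows and consent checks are easy to express as filters applied before any data reaches a model. A minimal sketch, assuming events carry a customer ID, a kind, and a timestamp; the window lengths, the `Event` type, and the function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    customer_id: str
    kind: str  # e.g. "page_view", "quiz_answer"
    at: datetime


# Hypothetical retention windows per data type; unlisted kinds default to a short window.
RETENTION = {"page_view": timedelta(days=30), "quiz_answer": timedelta(days=365)}
DEFAULT_RETENTION = timedelta(days=30)


def purge_expired(events: list[Event], now: datetime) -> list[Event]:
    """Keep only events still inside their retention window."""
    return [e for e in events if now - e.at <= RETENTION.get(e.kind, DEFAULT_RETENTION)]


def usable_for_personalization(events: list[Event], consented_ids: set[str]) -> list[Event]:
    """Drop events from customers who have not consented or have opted out."""
    return [e for e in events if e.customer_id in consented_ids]
```

Giving zero-party data like quiz answers a longer window than raw page views reflects the bias toward inputs customers chose to share.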

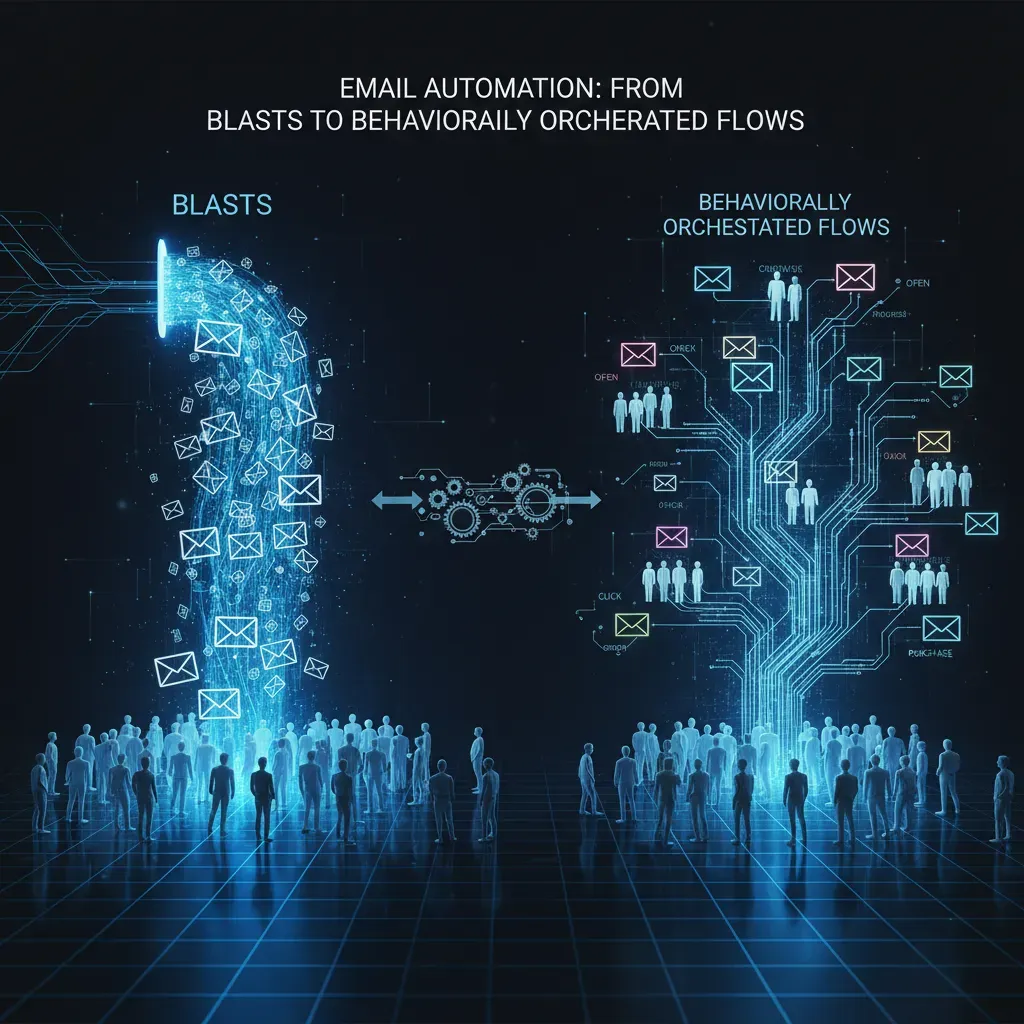

Email Automation: From Blasts to Behaviorally Orchestrated Flows

Before we leaned into AI, our email program was mostly “batch and blast.” We would pick a segment, write one message, and send it to everyone at once. It worked sometimes, but it also created fatigue. The big shift happened when we reworked email into behaviorally triggered flows that responded to what people actually did on our site.

Turning actions into automated journeys

We started with three core flows that covered most buying intent:

- Browse abandonment: when someone viewed a product (or category) but left without adding to cart.

- Cart recovery: when someone added items but didn’t check out.

- Lifecycle nudges: welcome, post-purchase education, replenishment reminders, and win-back.

Instead of one generic message, each flow had multiple branches. Timing mattered too. A browse email could go out within hours, while a win-back might wait days. This is where AI personalization started to feel real, not theoretical.
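The trigger-plus-delay pattern can be sketched as a lookup table. The trigger names and delays are hypothetical examples consistent with “hours” for browse emails and “days” for win-back, and the `purchased_since` flag is a simplified stand-in for suppressing a flow once the customer buys.

```python
from datetime import datetime, timedelta

# Hypothetical mapping from a behavioral trigger to (flow name, send delay).
FLOWS = {
    "browse_abandon": ("browse_abandonment", timedelta(hours=2)),
    "cart_abandon": ("cart_recovery", timedelta(hours=1)),
    "inactive_60d": ("win_back", timedelta(days=3)),
}


def schedule_email(trigger: str, occurred_at: datetime, purchased_since: bool = False):
    """Return (flow, send_time), or None if the trigger is unknown or the customer already bought."""
    if purchased_since or trigger not in FLOWS:
        return None
    flow, delay = FLOWS[trigger]
    return flow, occurred_at + delay
```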

AI picked the details of the “best next email”

We didn’t just automate sending. We let AI decide which template, subject line, and product block to show based on predicted intent. For example, if the model saw “high intent, low confidence,” it might choose a template with reviews and a simple guarantee. If it saw “price sensitive,” it might highlight bundles or lower-cost alternatives.

| Signal | What AI inferred | What email changed |
| --- | --- | --- |
| Repeated product views | Strong interest | More social proof + urgency |
| Category browsing | Still exploring | Comparison-style product blocks |
| Cart + exit | Checkout friction | Simpler CTA + support link |
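Choosing the email from predicted intent can be sketched as picking the most likely intent and mapping it to a template. This assumes a hypothetical model that returns intent probabilities; the template names mirror the table above plus the price-sensitive case, and the `min_confidence` fallback is an assumption added so an unsure model does not force a mismatched template.

```python
# Hypothetical template per inferred intent.
TEMPLATES = {
    "strong_interest": "social_proof_plus_urgency",
    "still_exploring": "comparison_product_blocks",
    "checkout_friction": "simple_cta_plus_support_link",
    "price_sensitive": "bundles_and_lower_cost_alternatives",
}


def pick_template(intent_probs: dict[str, float], min_confidence: float = 0.5,
                  fallback: str = "default_template") -> str:
    """Choose the template for the most likely intent; fall back when the model is unsure."""
    if not intent_probs:
        return fallback
    intent, p = max(intent_probs.items(), key=lambda kv: kv[1])
    if p < min_confidence or intent not in TEMPLATES:
        return fallback
    return TEMPLATES[intent]
```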

Automation scaled fast and drove outsized gains

Once these flows were live, they scaled without extra work. That’s what surprised me: automated emails produced a disproportionate share of the conversion lift because they hit at the exact moment someone was deciding.

Some of our “imperfect” subject lines still beat the polished ones. AI helped us target timing and content, but human creativity still made the message feel alive.

Product Recommendations: Tripling Revenue (and Why We Aren’t Done)

When I say AI personalization changed our growth curve, product recommendations are the clearest proof. We didn’t start with anything fancy. Our first tests were simple widgets like “People also bought” and “Frequently bought together”. They were easy to ship, easy to measure, and they gave us quick wins—mostly because they reduced decision fatigue at the exact moment a shopper was ready to act.
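“Frequently bought together” can be approximated by counting how often two items appear in the same order. A minimal sketch, assuming orders arrive as lists of SKUs; `min_support` and the function names are hypothetical, and production versions usually normalize counts (for example by item popularity) so bestsellers do not dominate every widget.

```python
from collections import Counter
from itertools import combinations


def frequently_bought_together(orders: list[list[str]], min_support: int = 2) -> dict[tuple[str, str], int]:
    """Count how often each pair of items shares an order; keep pairs seen at least min_support times."""
    pair_counts: Counter = Counter()
    for basket in orders:
        # Sorting gives each pair one canonical (a, b) order; set() ignores duplicate lines.
        for a, b in combinations(sorted(set(basket)), 2):
            pair_counts[(a, b)] += 1
    return {pair: n for pair, n in pair_counts.items() if n >= min_support}


def also_bought(item: str, pairs: dict[tuple[str, str], int], k: int = 3) -> list[str]:
    """Top-k partners for an item, strongest co-occurrence first."""
    partners: Counter = Counter()
    for (a, b), n in pairs.items():
        if item == a:
            partners[b] += n
        elif item == b:
            partners[a] += n
    return [other for other, _ in partners.most_common(k)]
```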

From basic widgets to session-based intelligence

After those early tests, we moved to context-aware, session-based recommendations. Instead of only using past purchases, the model looked at what a visitor was doing right now: the category they came from, the filters they used, the price range they hovered around, and even how fast they were browsing. That shift mattered because it made the recommendations feel less like a generic upsell and more like a helpful assistant.

- Simple recs: “People also bought” based on historical patterns

- Smarter recs: “Recommended for you” based on live session signals

- Context recs: bundles and add-ons matched to the current product and intent
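Session-based re-ranking can be sketched as a model's base score adjusted by what the visitor is doing right now. The boost factors (1.5× for the entry category, 0.5× outside the browsed price range), the types, and the function names are hypothetical; they stand in for signals a trained session model would learn.

```python
from dataclasses import dataclass


@dataclass
class Item:
    sku: str
    category: str
    price: float


@dataclass
class SessionContext:
    entry_category: str  # category the visitor arrived from
    price_low: float     # price range they have been browsing
    price_high: float


def session_score(item: Item, ctx: SessionContext, base: float) -> float:
    """Adjust a model's base score using live session context (hypothetical boosts)."""
    score = base
    if item.category == ctx.entry_category:
        score *= 1.5  # matches where the visitor came from
    if not (ctx.price_low <= item.price <= ctx.price_high):
        score *= 0.5  # outside the price range they have been browsing
    return score


def rerank(candidates: list[tuple[Item, float]], ctx: SessionContext) -> list[str]:
    """candidates: (Item, base_score) pairs; returns SKUs, best first."""
    ranked = sorted(candidates, key=lambda c: session_score(c[0], ctx, c[1]), reverse=True)
    return [item.sku for item, _ in ranked]
```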

Why the numbers jumped

In comparable studies, smart recommendations more than doubled conversion rates and boosted average order value (AOV) by roughly 50%. Because revenue per visitor is conversion rate multiplied by AOV, those two effects compound: about 2× conversion and 1.5× AOV works out to roughly 3× revenue per visitor. Our internal results tracked in the same direction, and in a few categories revenue per visitor climbed so fast it felt unreal. The key was relevance: the closer a recommendation matched the shopper’s intent, the less friction we created.

I still remember one launch where a single recommender block lifted the add-to-cart rate within 48 hours. It was a small UI change, but it compounded across thousands of sessions.

Ongoing tuning: the “vibe” matters too

We’re also learning that personalization isn’t only about what we recommend—it’s about how it’s presented. We monitor “vibe” personalization: tone, imagery, and layout choices that match the device and segment. For example, mobile shoppers often respond better to fewer, tighter options, while desktop users explore more.

| What we tune | Why it helps |
| --- | --- |
| Tone and microcopy | Builds trust and reduces hesitation |
| Imagery style | Makes recommendations feel consistent with the page |
| Widget density | Prevents overload, especially on mobile |
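Presentation tuning like widget density often lives in configuration rather than in the model. A hypothetical sketch, with placeholder values rather than numbers we tested; unknown devices fall back to the lighter mobile layout.

```python
# Hypothetical presentation settings per device; the values are placeholders.
WIDGET_CONFIG = {
    "mobile": {"max_items": 4, "layout": "carousel", "copy": "short"},
    "desktop": {"max_items": 8, "layout": "grid", "copy": "detailed"},
}


def render_plan(device: str, ranked_skus: list[str]) -> dict:
    """Trim the ranked list to the device's density and attach layout choices."""
    cfg = WIDGET_CONFIG.get(device, WIDGET_CONFIG["mobile"])
    return {"layout": cfg["layout"], "copy": cfg["copy"], "items": ranked_skus[: cfg["max_items"]]}
```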

Customer Segmentation, Churn Prediction, and Loyalty Signals

Once our AI personalization started lifting conversions, I realized we were still missing a big piece: retention. So I layered predictive segmentation on top of our usual audience groups. Instead of only sorting people by basic traits (like location or device), we used AI to spot patterns in behavior—what they viewed, how fast they returned, and where they dropped off. This helped us flag high-intent buyers and churn risks early, so we could intervene before the moment passed.

Predictive Segmentation: Finding High-Intent and At-Risk Users

Our AI model created segments that changed in real time. Someone could move from “browsing” to “ready to buy” after a few key actions. Another person could quietly drift into a churn-risk segment after a week of no activity.

- High-intent buyers: repeated product views, cart activity, pricing page visits, fast return sessions

- Churn risks: fewer sessions over time, email fatigue, abandoned carts without return, support issues
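A segment that updates in real time can be sketched as a function recomputed over a sliding window of recent events. The seven-day window and the thresholds (three product views plus a cart add for “high intent”) are hypothetical, chosen only to mirror the signals listed above; a trained model would replace these hand-set rules.

```python
from datetime import datetime, timedelta


def assign_segment(events: list[tuple[datetime, str]], now: datetime,
                   window: timedelta = timedelta(days=7)) -> str:
    """events: (timestamp, kind) pairs. Recomputed on every new event, so the label can change mid-session."""
    recent = [kind for at, kind in events if now - at <= window]
    if not recent:
        return "churn_risk"  # no activity inside the window
    views = recent.count("product_view")
    carts = recent.count("add_to_cart")
    if carts >= 1 and views >= 3:
        return "high_intent"
    return "browsing"
```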

“Segmentation stopped being a static label and became a living signal we could act on.”

Churn Prediction and Win-Back Flows That Protected LTV

Churn risk prediction changed how I approached messaging. Instead of sending the same follow-ups to everyone, we built targeted win-back flows. If the AI flagged a customer as likely to leave, we triggered a softer, more helpful sequence—often with a reminder of value, not just a discount.

Here’s a simplified version of how we treated churn scoring:

if churn_score > 0.70: trigger_winback_flow()

These win-back flows preserved customer lifetime value (LTV) because we reached people earlier, while they still had some intent left. Targeting only customers flagged as high risk also reduced wasted offers on people who were going to come back anyway.
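The one-line rule above can be expanded into a runnable sketch. This assumes a logistic churn model; the feature names, coefficients, and bias are hypothetical placeholders for values a trained model would supply, and only the 0.70 threshold comes from our rule.

```python
import math

# Hypothetical coefficients; in practice they would come from a model trained on past churn.
WEIGHTS = {
    "days_since_last_session": 0.08,
    "session_trend": -1.2,  # change in weekly sessions; negative (declining) raises risk
    "unopened_emails": 0.3,
    "open_support_tickets": 0.5,
}
BIAS = -3.0


def churn_score(features: dict[str, float]) -> float:
    """Logistic model: a probability-like score in (0, 1); missing features count as 0."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def should_trigger_winback(features: dict[str, float], threshold: float = 0.70) -> bool:
    return churn_score(features) > threshold
```

A customer who has been away 30 days, with declining sessions, five unopened emails, and one open ticket, scores about 0.93 here and enters the win-back flow; an active customer scores near 0.03 and receives no offer.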

Loyalty Signals: VIP Experiences and Exclusive Offers

We also tracked loyalty signals like repeat purchases and high referral activity. When the AI detected strong loyalty, we tested VIP experiences: early access to new items, exclusive bundles, and priority support. This wasn’t about pushing harder—it was about rewarding the right people in a personal way.

| Signal | What We Did |
| --- | --- |
| Repeat purchases | VIP bundles and reorder reminders |
| Referrals | Exclusive offers and thank-you perks |
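The table maps onto a small function. The thresholds and perk identifiers are hypothetical:

```python
def vip_perks(repeat_purchases: int, referrals: int,
              repeat_min: int = 3, referral_min: int = 2) -> list[str]:
    """Map loyalty signals to perks (thresholds are hypothetical)."""
    perks: list[str] = []
    if repeat_purchases >= repeat_min:
        perks += ["vip_bundle", "reorder_reminder"]
    if referrals >= referral_min:
        perks += ["exclusive_offer", "thank_you_perk"]
    return perks
```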

This is where AI personalization became more than a conversion tool. It influenced long-term retention, not just immediate sales.

Results, Lessons, and a Privacy-conscious Roadmap

What changed after six months

After six months of steady, iterative work, our AI personalization program delivered the result we were aiming for: a 180% lift in overall conversion rate. That number did not come from one “big launch.” It came from many small improvements stacked together—cleaner audience signals, better timing, and more relevant messages across key pages. We also saw fewer wasted clicks because visitors were guided to the right next step faster, instead of being shown the same generic experience.
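Assuming the 180% is a relative lift (the usual convention), it means the conversion rate ended at 2.8 times its starting value, not 1.8 times. With a purely illustrative baseline of 2% (not our actual figure):

$$
\text{lift} = \frac{CR_{\text{after}} - CR_{\text{before}}}{CR_{\text{before}}} = 1.80
\quad\Longrightarrow\quad
CR_{\text{after}} = 2.8\,CR_{\text{before}} = 2.8 \times 2\% = 5.6\%
$$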

The lesson we keep repeating

The biggest lesson I learned is simple: iterate fast, measure ruthlessly, and keep a privacy-first mindset. AI can suggest what to personalize, but it cannot decide what “good” means for your business. We had to define success clearly, track it every week, and be honest when a test did not work. Some ideas that sounded smart in meetings failed in real traffic. When that happened, we rolled back quickly, kept what performed, and moved on. This tight loop—test, learn, adjust—was the real engine behind the conversion lift.

The trade-offs we accepted

We did make trade-offs. We sacrificed some speed to build stronger consent flows and clearer data retention policies. That meant fewer shortcuts with tracking, more time spent reviewing what data we truly needed, and more effort to explain choices in plain language. In the short term, it slowed down experimentation. In the long term, it reduced risk, improved trust, and made our AI personalization more sustainable. I would make the same choice again, because personalization without trust is fragile.

A privacy-conscious roadmap from here

Next, we plan to invest more in real-time orchestration so experiences can adapt in the moment, not hours later. We will also expand zero-party signals—information people choose to share, like preferences and goals—because it is both useful and respectful. Finally, we will run controlled uplift tests at scale to separate real gains from noise and seasonality. If there is one takeaway from our journey, it is that AI works best when it is guided by disciplined measurement and grounded in privacy from day one.
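A controlled uplift test typically compares a randomized control group with a treatment group over the same period. One standard way to check whether the difference is more than noise is a two-proportion z-test; this sketch uses the normal approximation and only Python's standard library, and the function name is our own.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float, float]:
    """Compare control (a) and treatment (b) conversion rates; returns (relative_lift, z, two_sided_p)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return (p_b - p_a) / p_a, z, p_value
```

With illustrative numbers, 200 conversions from 10,000 control visitors against 260 from 10,000 treated visitors is a 30% relative lift with z ≈ 2.83 and a two-sided p-value below 0.01. Because both arms run over the same dates, seasonality affects them equally, which is what lets the test separate real gains from calendar effects.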

TL;DR: We increased conversions by 180% using AI personalization: targeted email automation, dynamic product recommendations, first-party data collection, and real-time orchestration—balanced with privacy-first practices.