I didn’t “fall in love” with AI in marketing because it wrote a cute headline. I fell for it the day it stopped my team from playing spreadsheet ping-pong at 10:47 p.m. before a launch. We were trying to decide which audience gets the new offer, which channel goes first, and whether to send now or in the morning. The old way was a meeting. The new way was a decision engine that made a call, showed its reasoning, and let us override it, like a calm co-pilot who doesn’t need coffee. This post is my attempt to separate the real results from the theater: where AI decision intelligence genuinely changes marketing ops, where it creates new messes (hello, authenticity), and what I’m betting on for 2026.

1) The night AI finally saved my launch calendar

It was 10:47 p.m., and my launch calendar was about to break. A partner asked for a last-minute change: new offer, different channel mix, and a tighter send-time window. Before, that kind of decision meant two hours of Slack pings, spreadsheet checks, and “can you approve this?” loops. That night, I ran it through our AI decision intelligence setup and had a clear recommendation in about fifteen minutes. I still made the call, but I didn’t have to rebuild the world to make it.

“Speed is the new creative advantage—if you can execute in hours instead of days, you get more at-bats with the same budget.” — Ann Handley

What changed in ops terms: fewer handoffs, fewer approvals, faster execution speed

The source theme—Marketing Operations Revolutionized by AI: Real Results—matches what I felt in that moment: execution speed became the main outcome. Vendors keep reporting it, and I get why. When AI handles the setup logic and checks, marketers reclaim hours that used to disappear into manual work.

- Fewer handoffs: less waiting for someone to pull reports or QA segments.

- Fewer approvals: guardrails and rules reduce “just in case” meetings.

- Faster execution speed: decisions move from “tomorrow morning” to “right now.”

What I mean by AI decision intelligence marketing (not content generation)

For me, AI decision intelligence in marketing is not “write me an email.” It’s a system that weighs signals (performance, audience behavior, constraints, inventory, fatigue), suggests the best next action, and then automates the approved decision so the change can be executed safely.
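To make "weighs signals and suggests the best next action" concrete, here is a minimal sketch of what a scoring step could look like. Everything in it is an assumption for illustration: the `Candidate` fields, the fatigue weighting, and the numbers are made up, and a real decision engine would use learned models rather than a hand-written formula.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One possible next action for a contact (all fields are hypothetical)."""
    action: str
    predicted_response: float  # model-estimated response probability, 0..1
    fatigue: float             # 0 = fresh, 1 = heavily messaged recently
    in_stock: bool             # inventory constraint


def score(c: Candidate, fatigue_weight: float = 0.5) -> float:
    """Blend signals into one number; a failed hard constraint zeroes the score."""
    if not c.in_stock:
        return 0.0
    return c.predicted_response * (1.0 - fatigue_weight * c.fatigue)


def recommend(candidates: list[Candidate]) -> tuple[Candidate, str]:
    """Return the top-scoring action plus a human-readable reason for review."""
    best = max(candidates, key=score)
    reason = (
        f"{best.action}: response={best.predicted_response:.2f}, "
        f"fatigue={best.fatigue:.2f}, score={score(best):.4f}"
    )
    return best, reason


options = [
    Candidate("email_new_offer", 0.12, 0.8, True),
    Candidate("sms_new_offer", 0.09, 0.1, True),
    Candidate("push_bundle", 0.20, 0.0, False),  # blocked by inventory
]
best, why = recommend(options)
print(why)
```

The point of returning a reason string alongside the pick is the "showed its reasoning, and let us override it" part: a human can see why SMS beat email (lower fatigue) before approving.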

Before vs after: from weekly cycles to real-time optimization

Before AI, our marketing ops looked like this: manual setup, static journeys, and a weekly optimization cycle. Now optimization happens closer to moment by moment: each interaction refines the next one continuously, instead of waiting for a fixed review window.

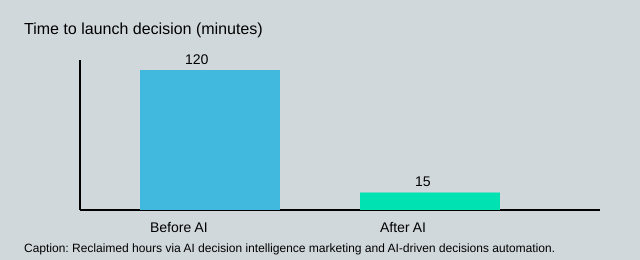

| Ops snapshot | Before | After |
|---|---|---|
| Time to decide offer/channel/send-time | 120 min | 15 min |
| Optimization cadence | Weekly cycle | Moment-by-moment (real-time) |

Small tangent: deleting our recurring “launch status” meeting felt oddly emotional. Not because I hate meetings, but because it proved the process had changed—not just the tools.

2) Connecting data to marketing strategy (and my messy middle)

Connecting data to strategy is the bridge between what we say we want (growth, retention, efficiency) and what we actually do every day in campaigns. When I rely on gut feel, my decisions change based on mood, pressure, or the last Slack message. When I connect strategy to data, those choices become repeatable and easier to improve.

“Without a strategy, data is just noise. With a strategy, data becomes a compass.” — Avinash Kaushik

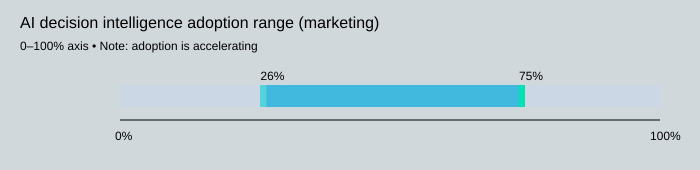

In the source material (“Marketing Operations Revolutionized by AI: Real Results”), the big shift is that AI doesn’t replace strategy; it forces it to be specific. Adoption is already real: the G2 insight cited there puts adoption of AI decision intelligence in marketing at between 26% and 75% of customers, and it’s accelerating. The most common AI-driven decisions are practical, not flashy: audience selection, channel routing, send-time optimization, journey progression, creative optimization, and automated A/B testing.

Practical inputs I map before I automate

- Audience signals: intent, engagement, lifecycle stage, CRM attributes (fuel for AI-driven audience selection).

- Channel performance: cost, conversion rate, deliverability, and response by segment (enables channel routing and send-time optimization).

- Send-time history: open/click/conversion patterns by day and hour.

- Journey rules: entry criteria, suppression logic, frequency caps, and progression triggers (supports journey progression).

Confession: I used to hoard dashboards. I thought more charts meant more control. AI forced me to name what I actually care about: the decisions. If I can’t state the decision clearly, the model can’t help—and neither can my team.

Mini playbook: the first 3 decisions I automate

- AI-driven audience selection: define the outcome (trial starts, renewals), then let the model rank who is most likely to act.

- Channel routing: set guardrails (budget, compliance), then route each person to the best channel based on response.

- Send-time optimization: personalize timing using historical behavior, not a single “best time to send.”
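As a rough illustration of the third item, send-time personalization can start as simply as "each contact's most common open hour, with a fallback when history is thin." This is a sketch under assumptions: the `(contact_id, hour)` event shape, the `fallback_hour`, and the `min_events` threshold are all hypothetical, and a production model would weigh recency and conversions, not just opens.

```python
from collections import Counter, defaultdict


def best_send_hours(events, fallback_hour=9, min_events=3):
    """Pick each contact's most frequent open hour.

    events: iterable of (contact_id, hour_of_day) pairs from past opens.
    Contacts with fewer than min_events opens get fallback_hour instead.
    """
    by_contact = defaultdict(Counter)
    for contact_id, hour in events:
        by_contact[contact_id][hour] += 1

    result = {}
    for contact_id, hours in by_contact.items():
        if sum(hours.values()) < min_events:
            result[contact_id] = fallback_hour
        else:
            # Highest count wins; ties resolve to the earliest hour.
            result[contact_id] = min(hours, key=lambda h: (-hours[h], h))
    return result


opens = [
    ("ana", 7), ("ana", 7), ("ana", 20),
    ("ben", 21), ("ben", 21), ("ben", 21),
    ("cy", 13),  # too little history, so the fallback applies
]
send_hours = best_send_hours(opens)
print(send_hours)
```

The fallback matters more than it looks: without it, one stray open decides a new subscriber's send time forever.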

The engine behind all of this is predictive modeling with continuous learning: every send, click, and conversion becomes feedback that improves the next decision. One “boring but life-saving” prerequisite is measurement standardization, especially across social channels (consistent UTMs, naming, and attribution rules), so the learning isn’t built on messy inputs.
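Consistent UTMs are easy to check automatically before a link ships. The sketch below assumes a house convention I made up for the example (three required parameters, lowercase snake_case values, a short allowlist of mediums); swap in whatever your own naming rules are.

```python
import re
from urllib.parse import urlparse, parse_qs

# Hypothetical house rules, not an industry standard.
REQUIRED = ("utm_source", "utm_medium", "utm_campaign")
ALLOWED_MEDIUMS = {"email", "paid_social", "organic_social", "cpc"}
SLUG = re.compile(r"^[a-z0-9]+(_[a-z0-9]+)*$")


def utm_problems(url: str) -> list[str]:
    """Return a list of naming problems for one tagged URL (empty means OK)."""
    params = parse_qs(urlparse(url).query)
    problems = []
    for key in REQUIRED:
        values = params.get(key)
        if not values:
            problems.append(f"missing {key}")
        elif not SLUG.match(values[0]):
            problems.append(f"{key} is not lowercase snake_case: {values[0]!r}")
    medium = params.get("utm_medium", [""])[0]
    if medium and SLUG.match(medium) and medium not in ALLOWED_MEDIUMS:
        problems.append(f"unknown utm_medium: {medium!r}")
    return problems


good = "https://example.com/launch?utm_source=newsletter&utm_medium=email&utm_campaign=spring_launch"
bad = "https://example.com/launch?utm_source=Newsletter&utm_medium=e-mail"
print(utm_problems(good))
print(utm_problems(bad))
```

Running a check like this in the campaign build step is cheaper than cleaning attribution reports after the fact.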

| Metric / Decision Area | Data |
|---|---|
| AI decision intelligence adoption (G2 insight) | 26%–75% of customers (accelerating) |
| Most adopted AI-driven decisions | Audience selection; Channel routing; Send-time optimization; Journey progression; Creative optimization; Automated A/B testing |

3) AI agents at marketing scale: when ‘set it and forget it’ becomes real (and scary)

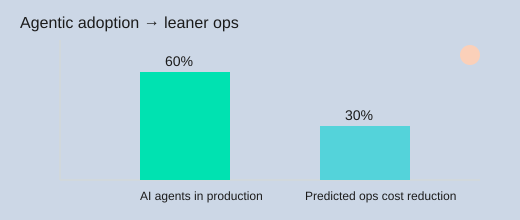

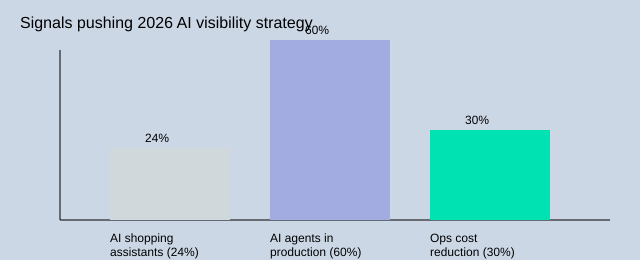

When I say AI agents at marketing scale, I’m not talking about a tool that suggests ideas. I mean delegated work: an agent that can take a goal, make choices, and execute tasks across systems. In Marketing Operations Revolutionized by AI: Real Results, the shift is clear: AI decision intelligence is moving from “experiment-only” to being embedded in core marketing workflows across teams. Nearly 60% of enterprises now have AI agents in production, and aggressive adopters are predicted to cut marketing operational costs by about 30%.

“Automation applied to an inefficient operation will magnify the inefficiency.” — Bill Gates

Autonomous marketing workflows: brief → build → launch → learn

This is how I explain autonomous marketing workflows in plain language:

- Brief: I set guardrails—goal, audience, budget caps, exclusions, and success metrics.

- Build: The agent drafts variants (copy, subject lines, landing blocks), tags assets, and sets tracking.

- Launch: The agent pushes to channels, schedules sends, and allocates spend.

- Learn: It reads results, updates targeting, ships optimization recommendations, and then repeats.

What autonomous execution looks like in a normal week

Here’s the scenario I use with stakeholders: I’m in a budget meeting, and an agent runs 50 micro-experiments/week (illustrative). It tests small changes (CTA order, offer framing, audience splits), then rolls winners forward. That’s the real power of autonomous execution: throughput without adding headcount.

Where I draw the line: approvals, brand voice, and kill switches

- Approvals: Anything that changes claims, pricing, or compliance needs human sign-off.

- Brand voice: I lock tone rules and “never say” lists; I still manually check the first send after a new model update.

- Kill switches: Hard stops for spend spikes, complaint rates, or off-brand language—pause first, ask questions second.
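The kill-switch rule above ("pause first, ask questions second") can be written as a handful of hard checks that run before the agent is allowed to keep going. This is a sketch, not our production config: the thresholds, the banned phrases, and the `CampaignSnapshot` fields are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical limits; tune these to your own risk tolerance.
MAX_SPEND_MULTIPLE = 3.0    # last hour vs. average hourly spend
MAX_COMPLAINT_RATE = 0.001  # complaints per send (0.1%)
BANNED_PHRASES = ("guaranteed results", "act now")  # "never say" list


@dataclass
class CampaignSnapshot:
    spend_last_hour: float
    avg_hourly_spend: float
    sends: int
    complaints: int
    copy: str


def kill_reasons(s: CampaignSnapshot) -> list[str]:
    """Return every tripped hard stop; an empty list means keep running."""
    reasons = []
    if s.avg_hourly_spend > 0 and s.spend_last_hour > MAX_SPEND_MULTIPLE * s.avg_hourly_spend:
        reasons.append("spend spike")
    if s.sends > 0 and s.complaints / s.sends > MAX_COMPLAINT_RATE:
        reasons.append("complaint rate")
    lowered = s.copy.lower()
    if any(phrase in lowered for phrase in BANNED_PHRASES):
        reasons.append("off-brand language")
    return reasons


def should_pause(s: CampaignSnapshot) -> bool:
    """Pause on any tripped check; humans investigate afterwards."""
    return bool(kill_reasons(s))


healthy = CampaignSnapshot(210.0, 200.0, 10_000, 3, "Your spring offer is here.")
risky = CampaignSnapshot(900.0, 200.0, 10_000, 25, "Act now for guaranteed results!")
print(kill_reasons(healthy), kill_reasons(risky))
```

Returning the list of reasons, rather than a bare boolean, gives whoever gets paged something to start from.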

| Metric | Value |
|---|---|
| Enterprises with AI agents in production | ~60% |
| Predicted ops cost reduction (aggressive adopters) | 30% |
| Experiment throughput example | 50 micro-experiments/week (illustrative) |

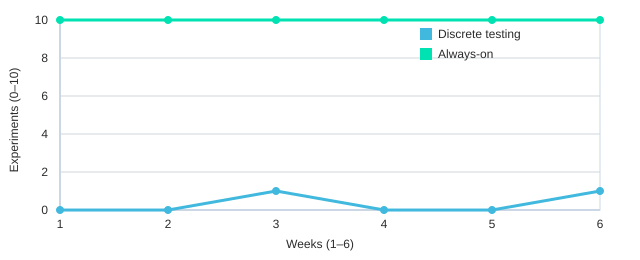

4) Always-on experimentation capabilities (goodbye, quarterly ‘test plans’)

In “Marketing Operations Revolutionized by AI: Real Results,” the shift that hit me hardest was this: always-on experimentation isn’t a faster version of classic A/B testing; it’s a different operating model. Instead of filing tickets, waiting for approvals, and launching one test at a time, experimentation lives inside the decision engine that serves messages, offers, and next-best actions.

From A/B test tickets to embedded decisioning

Classic testing is a discrete workflow: pick a hypothesis, build variants, split traffic, wait, then decide. Always-on flips that. AI proposes hypotheses continuously (based on patterns it sees), and the system allocates traffic dynamically, sending more people to what’s working while still reserving enough traffic to keep learning. That’s where the performance payoff of AI decisioning shows up: faster learning loops, less wasted spend, and fewer “we’ll know next quarter” moments.
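One simple way to get "more traffic to what's working while still reserving some to keep learning" is an epsilon-greedy bandit. I'm using it here only because it fits in a few lines; I don't know which allocation method any given vendor uses, and the variant names and conversion rates below are invented for the simulation.

```python
import random


class EpsilonGreedy:
    """Send most traffic to the current best variant, a small share at random."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.epsilon = epsilon            # share of traffic reserved for exploring
        self.rng = random.Random(seed)
        self.shows = {v: 0 for v in variants}
        self.wins = {v: 0 for v in variants}

    def rate(self, variant):
        shown = self.shows[variant]
        return self.wins[variant] / shown if shown else 0.0

    def choose(self):
        untried = [v for v in self.shows if self.shows[v] == 0]
        if untried:
            return untried[0]                          # try everything once
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))   # explore
        return max(self.shows, key=self.rate)          # exploit

    def record(self, variant, converted):
        self.shows[variant] += 1
        self.wins[variant] += int(converted)


# Simulated audience with made-up true rates the bandit cannot see.
TRUE_RATES = {"subject_a": 0.05, "subject_b": 0.15}
ROUNDS = 5000
bandit = EpsilonGreedy(TRUE_RATES, epsilon=0.1, seed=42)
audience = random.Random(7)
for _ in range(ROUNDS):
    variant = bandit.choose()
    bandit.record(variant, audience.random() < TRUE_RATES[variant])
print(bandit.shows)
```

The `epsilon` share is the "reserved traffic": set it to zero and the system stops learning the moment it picks an early favorite.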

Real-time optimization moments (the micro-layer)

What surprised me is how small the optimization units become. Instead of “this month’s landing page test,” we get Real-time optimization moments: subject line, send time, creative angle, offer framing—each interaction refines the next one, continuously. It’s not a fixed cycle anymore; it’s moment-by-moment tuning.

Personal note: our first always-on week

My team’s first week running always-on felt like switching from film cameras to digital. With film, you plan carefully because every shot is expensive. With digital, you still need craft—but you learn instantly and adjust. That immediate feedback changed how we worked day-to-day.

“The biggest risk is not taking any risk… In a world that’s changing really quickly, the only strategy that is guaranteed to fail is not taking risks.” — Mark Zuckerberg

Operational guardrails (so the machine doesn’t run wild)

- Sample sizes: set minimums before declaring winners; avoid “early spike” false positives.

- Holdouts: keep a control group to prove lift, not just movement.

- Pause rules: stop experiments when data quality breaks, costs jump, or brand risk appears.
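The first two guardrails combine naturally: refuse to report lift until both groups clear a minimum size, then measure against the holdout. A minimal sketch, assuming a flat `min_per_group` threshold that I picked for illustration; it computes relative lift only and is not a significance test.

```python
def holdout_lift(treated_n, treated_conv, holdout_n, holdout_conv, min_per_group=1000):
    """Relative lift of treated over holdout, or None if it is too early to say.

    Returns None when either group is below min_per_group (the "early spike"
    guard) or when the holdout has zero conversions (lift is undefined).
    """
    if treated_n < min_per_group or holdout_n < min_per_group:
        return None
    treated_rate = treated_conv / treated_n
    holdout_rate = holdout_conv / holdout_n
    if holdout_rate == 0:
        return None
    return (treated_rate - holdout_rate) / holdout_rate


# Made-up numbers: 3.3% vs 3.0% conversion is a 10% relative lift.
lift = holdout_lift(treated_n=20_000, treated_conv=660, holdout_n=2_000, holdout_conv=60)
too_early = holdout_lift(treated_n=300, treated_conv=30, holdout_n=40, holdout_conv=1)
print(lift, too_early)
```

The second call is the classic trap: 10% vs 2.5% looks like a huge win, but with 40 people in the holdout it's noise.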

| Dimension | Discrete testing | Always-on |
|---|---|---|
| Experimentation mode | Monthly/quarterly tests | Continuous |
| Optimization timing | Fixed cycles | Real-time optimization moments (trend) |

The payoff is adaptive customer journeys: better progression logic, because every step (open, click, browse, purchase, churn signal) teaches the next step what to do.

5) Generative AI blurs authenticity (and the Svedka moment)

Generative AI blurs authenticity in a way audiences can feel, even if they can’t explain it. In my day-to-day ops work, the “off” signal usually shows up as copy that sounds too smooth, too safe, or oddly generic—like it was optimized for approval, not for people.

“Your brand is what people say about you when you’re not in the room.” — Jeff Bezos

Generative AI authenticity marketing: what feels “off”

The cultural marker for me was Svedka running an AI-generated Super Bowl advertisement. Not as a gotcha, more like a timestamp. If AI can headline the biggest ad stage, then AI-generated creative is now mainstream, and the authenticity bar just got higher.

My rule: AI is like autotune. It’s useful, but you can hear it when it’s lazy. I once published an AI-polished line that read like a press release. A customer emailed me: “This doesn’t sound like you.” They were right, and it reminded me that authenticity with generative AI is less about tools and more about trust.

Content amplification AI strategies without losing your voice

I still use AI for speed: drafts, variations, summaries, and repurposing. But I keep the “human spine” in place. Practical content ops that work:

- Disclose when appropriate (especially for thought leadership and customer-facing claims).

- Human review for tone, accuracy, and “would I say this out loud?”

- Protect voice with a short style guide and banned phrases list.

- Source notes in the doc so edits don’t erase intent.

Measurement gets weird: data transparency and ownership

AI also complicates how we measure visibility and efficacy. In zero-click search, people may get answers without visiting your site, so attribution can look “worse” even when impact is real. That’s why data transparency and ownership matter: know what data you own, what platforms summarize, and what your AI tools store or learn from.

| Authenticity risk checklist (illustrative) | Score |
|---|---|
| Disclosure | 0/1 |
| Brand voice | 0–5 |
| Human review | 0/1 |
| Source transparency | 0/1 |
| Example content mix target | Human-led 60%, AI-assisted 40% |

6) Programmatic advertising AI precision + the 2026 visibility scramble

Programmatic advertising AI precision: real-time decisions that actually learn

In 2026, I’m treating AI-driven precision in programmatic advertising as the default, not the upgrade. The shift is simple: platforms now use performance data to automatically decide what to buy, where to place it, and when to stop, often in real time. This is programmatic buying at speed: bids, placements, and creative rotation adjust based on what’s working right now, not what worked last week. It matches what I’m seeing in “Marketing Operations Revolutionized by AI: Real Results”: less manual tuning, more system-led optimization, and clearer accountability.

AI visibility strategy for brands: discoverable to agents, not just people

The scramble is visibility. If nearly 60% of enterprises already have AI agents in production, then “search” is no longer only a human activity. Brands need to accelerate their AI visibility plans so they’re chosen inside agentic transactions, when an assistant compares options, checks availability, and recommends a purchase. That’s why 2026 predictions feel urgent: being “findable” now includes being readable by machines.

Signals pushing 2026 AI visibility strategy:

| Signal | Value | Why it matters in 2026 |
|---|---|---|
| Consumers using AI shopping assistants | 24% | Delegated purchase support is becoming normal |
| Enterprises with AI agents in production | ~60% | More decisions happen through agents, not browsers |
| Year focus | 2026 | Readiness planning for visibility and automation |

The Conversational AI marketing layer becomes the control panel

As a conversational AI layer becomes the interface, I can ask, “Which placements drove profitable carts in the last 6 hours?” and trigger actions (pause, shift budget, generate new variants) without digging through five dashboards. That’s the operational change I’m planning for.

What I’m changing for 2026 readiness

I’m tightening product feeds, adding clean schema, and expanding creative variations so the system has more to test. I’m also rebuilding measurement around incrementality and profit, not clicks, because real-time buying only helps if the goal is correct. With 24% of consumers already using AI shopping assistants, my AI visibility work now includes making offers, inventory, and policies easy for agents to verify.

“The best marketing doesn’t feel like marketing.” — Tom Fishburne

In 2026, that often means the assistant chooses you before the customer even sees an ad.
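For the "clean schema" piece of that readiness work, one concrete form is schema.org `Product` markup emitted as JSON-LD, so price, availability, and return policy are machine-readable. The sketch below is a minimal example with a made-up product; the `product_jsonld` helper is hypothetical, and a real feed would carry more properties than this.

```python
import json


def product_jsonld(name, sku, price, currency, in_stock, return_days):
    """Build a minimal schema.org Product document with one Offer."""
    availability = "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
            "hasMerchantReturnPolicy": {
                "@type": "MerchantReturnPolicy",
                "merchantReturnDays": return_days,
            },
        },
    }


# Hypothetical product used only for illustration.
doc = product_jsonld("Trail Bottle 750ml", "TB-750", 49, "USD", in_stock=True, return_days=30)
print(json.dumps(doc, indent=2))
```

The output goes inside a `<script type="application/ld+json">` tag on the product page, which is where crawlers and shopping assistants look for it.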

TL;DR: AI is moving from “experiments” to core marketing operations: decision intelligence + AI agents + autonomous execution can speed work, cut ops costs (a predicted ~30% for aggressive adopters), and enable always-on experimentation, if you protect authenticity, data ownership, and measurement.