I remember walking into a weekly ops review where an eager data scientist announced a promising ML pilot. The room applauded politely, then asked the only question that mattered: ‘How does this move the business needle?’ That moment taught me a hard lesson: AI without operational alignment is an expensive experiment. In this post, writing as a practitioner-turned-advisor, I’ll walk you through a pragmatic framework for an AI-first operations strategy that leaders can actually act on.

Business Alignment First

When I build an AI-First Operations Strategy, I start with one rule: business outcomes lead, technology follows. If an AI project cannot clearly tie to revenue growth, cost reduction, customer experience, or risk mitigation, it becomes a science project. Alignment keeps teams focused, helps leaders fund the right work, and makes success measurable.

“If we can’t name the KPI, we can’t call it a strategy.”

Start with value levers, not models

I ask leaders to brainstorm three diverse value levers and map one pilot to each. This prevents over-investing in a single type of win.

- Revenue growth: AI-assisted lead scoring to prioritize high-intent prospects.

- Cost reduction: Intelligent document processing to cut manual invoice handling.

- CX improvement: Support triage that routes tickets faster and reduces wait time.

Each pilot should have a simple “why now” statement and a measurable target, like +5% conversion, -20% handling time, or -15% average response time.

Prioritize with a value-vs-feasibility matrix

To choose what to do first, I use a scoring template with exec sponsors. It’s basic, but it forces clarity.

| Criteria | Score (1–5) | Notes |
| --- | --- | --- |
| Business Value | 1–5 | Revenue, cost, CX, or risk impact |
| Feasibility | 1–5 | Data readiness, integration effort, change impact |
| Time-to-Impact | 1–5 | How fast we can prove results |

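I then rank pilots by a simple formula: (Value + Time-to-Impact) - Feasibility Risk, where Feasibility Risk is the inverse of the Feasibility score (a low Feasibility score means high risk). The exact math matters less than the shared decision.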
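As a rough sketch, the ranking could be written in a few lines of Python. The pilot scores below are made up for illustration, and the code assumes Feasibility Risk equals 6 minus the 1 to 5 Feasibility score, which is one possible reading rather than a rule stated here.

```python
from dataclasses import dataclass


@dataclass
class Pilot:
    name: str
    value: int            # Business Value, 1-5
    feasibility: int      # Feasibility, 1-5 (higher = easier)
    time_to_impact: int   # Time-to-Impact, 1-5 (higher = faster)

    def priority(self) -> int:
        # Assumption: Feasibility Risk = 6 - Feasibility, so the hardest
        # pilot (feasibility 1) carries risk 5 and the easiest carries 1.
        feasibility_risk = 6 - self.feasibility
        return (self.value + self.time_to_impact) - feasibility_risk


# Hypothetical scores for the three example pilots.
pilots = [
    Pilot("Lead scoring", value=5, feasibility=3, time_to_impact=3),
    Pilot("Invoice processing", value=4, feasibility=4, time_to_impact=4),
    Pilot("Support triage", value=3, feasibility=5, time_to_impact=5),
]

ranked = sorted(pilots, key=lambda p: p.priority(), reverse=True)
for p in ranked:
    print(f"{p.name}: {p.priority()}")
```

With these invented scores, the fast and easy pilot outranks the higher-value but riskier one, which is the kind of trade-off the sponsor conversation should surface.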

Tactical tip: run a discovery sprint in months 0–3

Before building, I run a short discovery sprint focused on stakeholder interviews and KPI alignment. The output is a one-page pilot charter: owner, baseline metric, target metric, data sources, and success criteria. This is how AI work stays tied to real operational outcomes.
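One way to keep the charter honest is to capture it as a small data structure and check it for gaps before any build work starts. The sketch below is illustrative only: the class name, the field names, and the example values are assumptions, not a standard template.

```python
from dataclasses import dataclass, field


@dataclass
class PilotCharter:
    owner: str
    kpi: str
    baseline: float
    target: float
    data_sources: list[str] = field(default_factory=list)
    success_criteria: str = ""

    def missing_fields(self) -> list[str]:
        """Return the names of charter fields that are still empty."""
        missing = []
        if not self.owner:
            missing.append("owner")
        if not self.kpi:
            missing.append("kpi")
        if not self.data_sources:
            missing.append("data_sources")
        if not self.success_criteria:
            missing.append("success_criteria")
        return missing

    def target_change_pct(self) -> float:
        """Relative change from baseline to target, as a percentage."""
        return (self.target - self.baseline) / self.baseline * 100


# Hypothetical charter for the invoice-handling pilot.
charter = PilotCharter(
    owner="AP Operations Lead",
    kpi="invoice handling time (minutes)",
    baseline=10.0,
    target=8.0,
    data_sources=["ERP invoices"],
)
print(charter.missing_fields())
```

A charter with an empty `missing_fields()` list and an agreed target change is a reasonable minimum before the pilot is funded.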

Unified Data Foundation

In every AI-First Operations Strategy I have helped shape, data readiness has been the number-one success factor. Before I worry about model choice, I focus on four basics: quality (is it correct?), accessibility (can teams use it?), integration (does it connect across systems?), and governance (is it controlled and trusted?). If any one of these is weak, AI results become unstable, slow, or hard to scale.

Why documentation matters (a lesson I learned the hard way)

I once saw a promising forecasting model fail in production, even though it looked great in testing. The root cause was not the algorithm. It was the finance data pipeline: it had undocumented joins between tables, and those joins changed how revenue was calculated after a system update. Nobody could explain the logic quickly, so we lost weeks rebuilding trust in the numbers. That experience made me treat documentation as part of the product, not a “nice to have.”

“If you can’t explain where a number came from, you can’t scale AI on top of it.”

Set standards that make data usable across operations

To build a unified foundation, I set clear standards early so every team contributes data in a consistent way:

- Common data catalog with owners, definitions, and lineage

- Master data for customers, products, suppliers, locations, and chart of accounts

- API-based integration to core systems like ERP, CRM, and supply chain tools

- Governance rules for access, retention, privacy, and change control

Plan a 3–9 month modernization phase

I typically plan a 3–9 month pipeline modernization phase to unblock model training and deployment. The timeline depends on system sprawl and data debt, but the work is similar: fix broken pipelines, standardize key fields, automate validation checks, and document transformations.

| Focus Area | What I Standardize |
| --- | --- |
| Quality | Validation rules, anomaly checks, and reconciliations |
| Integration | APIs, event streams, and shared identifiers |
| Governance | Roles, approvals, and audit trails |
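To make "automate validation checks" concrete, here is a minimal sketch of a completeness check and a reconciliation check on an invoice record. The field names (`invoice_id`, `supplier_id`, `total`, `line_items`) and the one-cent tolerance are hypothetical; a real pipeline would take them from the data catalog.

```python
def validate_invoice(record: dict) -> list[str]:
    """Return a list of human-readable validation failures (empty = pass)."""
    errors = []

    # Completeness: required fields must be present and non-empty.
    for name in ("invoice_id", "supplier_id", "total", "line_items"):
        if record.get(name) in (None, "", []):
            errors.append(f"missing field: {name}")
    if errors:
        return errors

    # Reconciliation: line items must add up to the reported total
    # (assumed tolerance of one cent).
    line_sum = sum(item["amount"] for item in record["line_items"])
    if abs(line_sum - record["total"]) > 0.01:
        errors.append(f"total {record['total']} != line items {line_sum}")

    return errors


good = {
    "invoice_id": "INV-1",
    "supplier_id": "S-9",
    "total": 150.0,
    "line_items": [{"amount": 100.0}, {"amount": 50.0}],
}
print(validate_invoice(good))
```

Checks like these are cheap to run on every load, and their failure messages double as documentation of what each field is supposed to mean.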

Use Case Identification

When I build an AI-First Operations Strategy, I start by picking use cases that are both valuable and realistic. The goal is not to “do AI,” but to remove friction from daily work in a way we can measure. I use a simple scoring approach so teams can agree on priorities without long debates.

A practical way to spot quick wins

I map each idea across four axes: value, data readiness, complexity, and time-to-impact. High-value, data-ready, low-complexity work that shows results fast usually makes the best first pilot.

| Axis | What I look for |
| --- | --- |
| Value | Cost saved, time saved, risk reduced, or revenue protected |
| Data readiness | Clean inputs, clear owners, and access approved |
| Complexity | Few integrations, stable process, low exception rate |
| Time-to-impact | Results in weeks, not quarters |

Evaluate feasibility with the right people in the room

I bring a cross-functional group together—product, operations, legal, and data—to pressure-test each use case. Ops confirms the workflow details, data checks availability and quality, product aligns on user impact, and legal flags privacy, retention, and regulatory risk early. This avoids building something that later gets blocked.

Rule I follow: if legal or data ownership is unclear, it is not a “quick win.”
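The four axes and the rule above can be combined into a simple screening function. This is a sketch under stated assumptions: the 1 to 5 scales, the cutoffs (value and data readiness of at least 4, complexity of at most 2), and the 12-week limit are all hypothetical numbers chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UseCase:
    name: str
    value: int            # 1-5, higher = more value
    data_readiness: int   # 1-5, higher = cleaner, approved data
    complexity: int       # 1-5, higher = more integrations and exceptions
    weeks_to_impact: int  # estimated weeks until measurable results
    legal_clear: bool     # legal has reviewed privacy, retention, regulation
    data_owner: Optional[str] = None


def is_quick_win(uc: UseCase, max_weeks: int = 12) -> bool:
    # Hard rule from the text: unclear legal status or data ownership
    # disqualifies a use case, whatever its scores.
    if not uc.legal_clear or not uc.data_owner:
        return False
    # Hypothetical cutoffs for "high value, data-ready, low complexity".
    return (
        uc.value >= 4
        and uc.data_readiness >= 4
        and uc.complexity <= 2
        and uc.weeks_to_impact <= max_weeks
    )


onboarding = UseCase("HR onboarding requests", 4, 5, 2, 8, True, "HR Ops")
print(is_quick_win(onboarding))
```

Putting the ownership check first mirrors the rule: scores are only worth debating once legal status and data ownership are settled.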

A real example from my work

In one organization, we piloted HR workflow automation for employee onboarding requests. By standardizing intake, auto-routing approvals, and generating draft responses, we reduced processing time by 40%. It was a clear win because the baseline was known, the data lived in one system, and success metrics were simple.

Don’t chase novelty

I avoid starting with agentic or experimental systems. Instead, I prioritize composable, repeatable use cases—like ticket triage, document classification, knowledge search, and form automation—where we can reuse components and controls across teams.

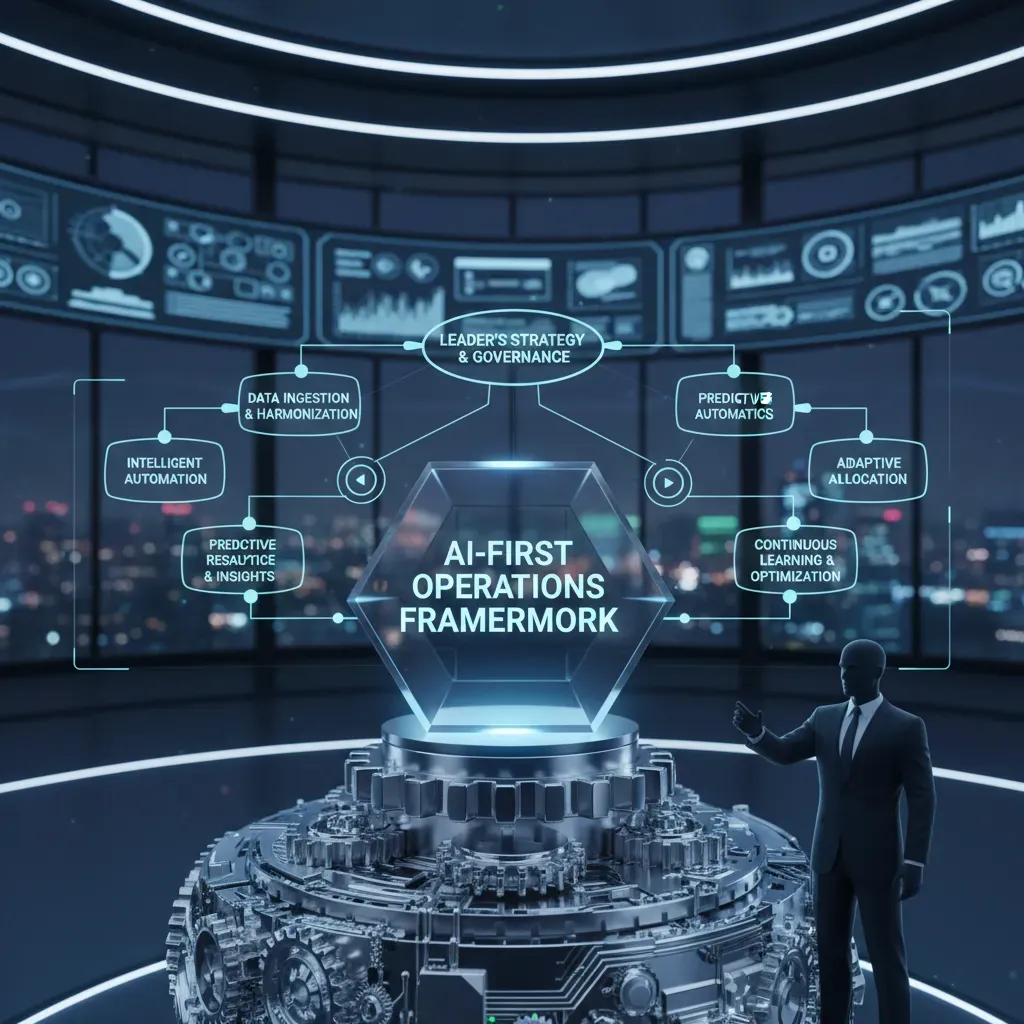

Enterprise AI Architecture

When I design an AI-First Operations Strategy, I treat architecture as the guardrails that let AI scale safely. If the foundation is weak, even a great model becomes hard to deploy, monitor, and improve.

Architect for scale

I start with an API-first approach so every model capability is a service the business can reuse. I prefer modular services (separate data access, feature logic, model inference, and orchestration) because it reduces coupling and speeds up change.

- API-first design: consistent endpoints for inference, feedback, and audit logs

- Modular services: swap models without rewriting upstream systems

- Cloud-native infrastructure: containers, autoscaling, and managed storage for reliability
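A minimal sketch of the "swap models without rewriting upstream systems" idea follows. All class and method names here are hypothetical, and the two scorers are toy stand-ins for real models; the point is only that callers depend on a stable service interface, not on a model class.

```python
from typing import Protocol


class Model(Protocol):
    version: str

    def predict(self, features: dict) -> float: ...


class RuleBasedScorer:
    version = "rules-v1"

    def predict(self, features: dict) -> float:
        return 1.0 if features.get("priority") == "high" else 0.2


class WeightedScorer:
    version = "weighted-v2"

    def predict(self, features: dict) -> float:
        return min(1.0, 0.1 * features.get("open_tickets", 0))


class InferenceService:
    """Stable entry point; upstream callers never see the model class."""

    def __init__(self, model: Model) -> None:
        self._model = model
        self.audit_log: list[dict] = []

    def infer(self, features: dict) -> dict:
        score = self._model.predict(features)
        response = {"score": score, "model_version": self._model.version}
        # Every call is recorded so predictions can be audited later.
        self.audit_log.append({"input": features, **response})
        return response

    def swap_model(self, model: Model) -> None:
        self._model = model


service = InferenceService(RuleBasedScorer())
first = service.infer({"priority": "high"})
service.swap_model(WeightedScorer())
second = service.infer({"open_tickets": 5})
```

Because the response includes `model_version`, the audit log shows which model produced each prediction even after a swap.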

Adopt modern MLOps

I use MLOps to make models behave like production software. That means repeatable releases, clear approvals, and fast rollback when something breaks.

- CI/CD for models: automated tests for data quality, bias checks, and performance gates

- Monitoring: latency, error rates, and prediction distributions in real time

- Retraining pipelines: scheduled or trigger-based retraining with versioned datasets

- Deployment procedures: canary releases and A/B tests before full rollout
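A performance gate in a model CI/CD pipeline can be as simple as a function that compares a candidate against the current baseline and blocks the release on regression. The metric names and thresholds below (one point of accuracy, 200 ms p95 latency) are hypothetical placeholders, not recommended values.

```python
def release_gate(
    candidate: dict,
    baseline: dict,
    max_accuracy_drop: float = 0.01,
    max_latency_ms: float = 200.0,
) -> tuple[bool, list[str]]:
    """Return (passed, reasons); reasons is empty when the gate passes."""
    reasons = []
    if candidate["accuracy"] < baseline["accuracy"] - max_accuracy_drop:
        reasons.append("accuracy regressed beyond tolerance")
    if candidate["p95_latency_ms"] > max_latency_ms:
        reasons.append("p95 latency above limit")
    return (not reasons, reasons)


baseline = {"accuracy": 0.90, "p95_latency_ms": 140.0}
candidate = {"accuracy": 0.91, "p95_latency_ms": 150.0}
passed, reasons = release_gate(candidate, baseline)
```

Returning the reasons alongside the pass/fail flag gives the approver something specific to act on when a release is blocked.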

Decide build vs buy (a simple rubric)

I avoid defaulting to “build everything.” Instead, I score options using a quick rubric:

| Factor | Build when… | Buy when… |
| --- | --- | --- |
| Data advantage | You have unique data | Data is common |
| Speed | Timeline is flexible | You need value fast |
| Risk | You can own compliance | Vendor has proven controls |
| Cost | Long-term scale is large | Usage is uncertain |

Scaling production models: the hard parts

In real operations, I plan early for drift, SLAs, and cost. Drift monitoring catches when inputs change; SLAs define acceptable latency and accuracy; and cost controls prevent runaway spend.
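Drift monitoring can start with a single statistic per input feature. The sketch below uses the Population Stability Index (PSI), which is one common choice and not a method named in this post; the bin count and the small floor for empty bins are assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 5) -> float:
    """Population Stability Index between a baseline and a current sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]        # training-time inputs
shifted = [0.5 + i / 200 for i in range(100)]   # inputs after a shift
print(psi(baseline, baseline), psi(baseline, shifted))
```

A widely used rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, but the right alert threshold depends on the feature and the cost of a false alarm.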

“A model in production is a living system—monitor it like one.”

Governance, Ethics & Operationalization

When I build an AI-First Operations Strategy, I treat governance as the “operating system” that keeps speed from turning into risk. If we want AI in daily workflows, we need clear rules for how models are selected, trained, deployed, and monitored.

Build an AI governance framework

I start with simple, written policies that everyone can follow. These policies cover risk levels, bias checks, data privacy, and compliance requirements. I also define who approves what, so decisions do not get stuck or made informally.

- Risk tiers: low-risk internal tools vs. high-risk customer-facing decisions

- Bias assessment: what we test, how we test, and what “pass” means

- Data privacy: allowed data types, retention rules, and access controls

- Compliance: mapping to relevant laws, contracts, and industry standards

Ethics guardrails and review cadence

Ethics cannot be a one-time checklist. I set guardrails that are easy to remember and enforce, then I schedule recurring reviews. I also bring legal and compliance into design reviews early, not at the end, so we avoid rework.

My rule: if an AI system can impact a person’s access, cost, or opportunity, it must have stronger review and clearer explanations.
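The risk tiers and the rule above could be encoded so that every new system is tiered the same way. This is a sketch only: the two-tier split, the function name, and the review items attached to each tier are hypothetical and would come from your own governance policy.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    HIGH = "high"


def assign_tier(
    customer_facing: bool,
    affects_access: bool = False,
    affects_cost: bool = False,
    affects_opportunity: bool = False,
) -> RiskTier:
    # Assumption: any customer-facing decision, or any impact on a person's
    # access, cost, or opportunity, puts the system in the high tier.
    if customer_facing or affects_access or affects_cost or affects_opportunity:
        return RiskTier.HIGH
    return RiskTier.LOW


# Hypothetical review requirements per tier.
REVIEW_REQUIREMENTS = {
    RiskTier.LOW: ["design review"],
    RiskTier.HIGH: [
        "design review",
        "bias assessment",
        "legal and compliance sign-off",
        "plain-language explanation for affected people",
    ],
}

tier = assign_tier(customer_facing=False, affects_cost=True)
print(tier.value, REVIEW_REQUIREMENTS[tier])
```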

Operational excellence for AI systems

To operationalize AI, I run it like any other production service. That means clear SLAs, runbooks, and incident response plans for model failures and data issues (like drift, missing fields, or pipeline breaks).

| Operational item | What I define |
| --- | --- |
| SLA | Uptime, latency, quality targets, escalation path |
| Runbook | Step-by-step checks, rollback steps, owner on-call |
| Incident response | Severity levels, communication plan, post-incident review |

Agentic AI planning (agent swarms)

If I adopt agentic worker models, I add governance for “agent swarms”: permission boundaries, tool access rules, spend limits, and audit logs. I also require a human-in-the-loop step for high-impact actions, so autonomy stays controlled.
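The four controls named above (permission boundaries, tool access rules, spend limits, audit logs) plus the human-in-the-loop step can be sketched as a single authorization check that every agent action passes through. The class names, tool names, and limits are hypothetical; a production system would also need authentication and persistent logs.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    allowed_tools: set[str]
    spend_limit: float
    high_impact_tools: set[str] = field(default_factory=set)


@dataclass
class AgentGuard:
    policy: AgentPolicy
    spent: float = 0.0
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, tool: str, cost: float, human_approved: bool = False) -> bool:
        if tool not in self.policy.allowed_tools:
            allowed, reason = False, "tool not permitted"
        elif self.spent + cost > self.policy.spend_limit:
            allowed, reason = False, "spend limit exceeded"
        elif tool in self.policy.high_impact_tools and not human_approved:
            allowed, reason = False, "human approval required"
        else:
            allowed, reason = True, "ok"
            self.spent += cost
        # Every decision is logged, including denials.
        self.audit_log.append(
            {"tool": tool, "cost": cost, "allowed": allowed, "reason": reason}
        )
        return allowed


guard = AgentGuard(
    AgentPolicy(
        allowed_tools={"search_kb", "issue_refund"},
        spend_limit=1.0,
        high_impact_tools={"issue_refund"},
    )
)
print(guard.authorize("search_kb", cost=0.4))
print(guard.authorize("issue_refund", cost=0.1))
```

Logging denials as well as approvals matters: a spike in refused actions is often the first sign that an agent is drifting outside its intended job.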

Execution Roadmap & Measurement

A realistic 12–18 month roadmap

When I build an AI-First Operations Strategy, I plan for steady wins, not a “big bang.” A simple 12–18 month roadmap keeps leaders aligned and reduces rework.

- Discovery (0–3 months): pick 2–3 high-value workflows, map current steps, confirm data access, and define success metrics. I also run small pilots to prove feasibility.

- Pipeline Modernization (3–9 months): fix the plumbing (data quality checks, feature pipelines, model monitoring, and secure deployment paths). Most later delays trace back to skipping this phase.

- Scaling (9–18 months): expand to more teams, standardize templates, and automate governance so each new use case ships faster than the last.

Execution planning: checkpoints and gates

I use checkpoints with clear go/no-go gates and sponsor reviews. Typical gates include: data readiness, risk review, pilot results, and production readiness. If a gate fails, we pause and fix the root cause instead of pushing forward.
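The gate logic is simple enough to write down explicitly. In this sketch the gate names come from the list above, while the function name and the assumption that gates are evaluated in that order are illustrative.

```python
GATES = ["data readiness", "risk review", "pilot results", "production readiness"]


def next_action(results: dict[str, bool]) -> str:
    """Return 'go' if every gate passed, else name the first failed gate."""
    for gate in GATES:
        # A gate with no recorded result counts as not passed.
        if not results.get(gate, False):
            return f"pause: fix root cause at '{gate}'"
    return "go"


status = next_action(
    {"data readiness": True, "risk review": True, "pilot results": False}
)
print(status)
```

Treating a missing result as a failure keeps a project from slipping through a gate that nobody actually reviewed.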

Here’s a sample RACI I’ve used:

| Activity | R | A | C | I |
| --- | --- | --- | --- | --- |
| Use case selection | Ops Lead | Exec Sponsor | Finance, IT | Teams |
| Data access & quality | Data Eng | CIO/IT | Security | Ops |
| Model build & eval | ML Lead | Product Owner | Risk/Legal | Sponsor |
| Production release | MLOps | CIO/IT | Ops Lead | All |

KPIs that keep the strategy honest

- Business outcomes: cycle time, error rate, revenue protected, cost saved

- Model performance: accuracy, drift, hallucination rate (for GenAI)

- Data quality: freshness, completeness, defect rate

- Time-to-deployment: idea-to-prod days, release frequency

- Cost-per-inference: unit cost, peak-load cost, tooling overhead
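Two of these KPIs reduce to one-line calculations, sketched here with made-up numbers. The function names and the inputs (monthly spend, request count, dates) are assumptions for illustration.

```python
from datetime import date


def cost_per_inference(total_cost: float, inference_count: int) -> float:
    """Unit cost: total serving spend divided by number of predictions."""
    if inference_count <= 0:
        raise ValueError("inference_count must be positive")
    return total_cost / inference_count


def idea_to_prod_days(idea_logged: date, released: date) -> int:
    """Time-to-deployment in calendar days."""
    return (released - idea_logged).days


# Hypothetical month: 1,200 in serving spend across 400,000 predictions.
unit_cost = cost_per_inference(1200.0, 400_000)
lead_time = idea_to_prod_days(date(2024, 1, 15), date(2024, 4, 14))
print(f"{unit_cost:.4f} per inference, {lead_time} days idea-to-prod")
```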

Wild card: agentic AI “workers”

If agentic AI boosts throughput by 30%, I still budget for rising governance costs: more audits, stronger access controls, and better logging. I treat that trade-off as a measurable KPI, not a surprise.

A Human-Centered AI Operations Playbook

When I step back and look at an AI-First Operations Strategy, I see a simple path that leaders can repeat. I start with business alignment, because AI only matters when it supports clear goals like faster cycle time, fewer errors, or better service. Next, I secure the data foundation so teams can trust what the models learn from and what they produce. Then I prioritize use cases based on value and risk, instead of chasing the newest tool. After that, I build scalable architecture so pilots can grow into real operations without constant rework. Finally, I govern and measure, because what we do not track will drift, and what we do not govern will break trust.

My closing note is this: I treat AI like a new class of employee. It needs standards, training data it is allowed to use, and clear job boundaries. It also needs regular reviews for quality, bias, security, and cost. And just like people move roles, AI systems need a retirement path, so old models and agents do not linger in workflows after they stop being safe or useful.

If you want a practical next step, I recommend a short discovery sprint this quarter. In two to four weeks, map your top operational pain points, check data readiness, and identify constraints from legal, security, and compliance. Then report back with three prioritized pilots that have owners, success metrics, and a plan to scale if they work.

Here is my “if we…” story. If we deploy agentic AI to handle intake, routing, and first-draft responses across support and back-office work, we might reduce manual effort by 25% within a quarter. But we should expect a trade-off: a 10% increase in governance budget for monitoring, audit logs, access controls, and human review. In my experience, that is a fair exchange, because the goal is not automation at any cost; it is reliable performance with accountability.
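To see how the exchange nets out, the scenario can be turned into simple arithmetic. The 25% and 10% rates come from the scenario above; the cost figures are invented purely to show the calculation.

```python
def net_quarterly_benefit(
    manual_cost: float,
    governance_budget: float,
    effort_reduction: float = 0.25,
    governance_increase: float = 0.10,
) -> float:
    """Savings from reduced manual effort minus added governance spend."""
    savings = manual_cost * effort_reduction
    extra_governance = governance_budget * governance_increase
    return savings - extra_governance


# Hypothetical quarter: 400,000 in manual effort, 150,000 governance budget.
net = net_quarterly_benefit(manual_cost=400_000, governance_budget=150_000)
print(round(net))
```

The result is only as good as the two base figures, so the useful exercise is to plug in your own and see how sensitive the net benefit is to each rate.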

AI-first operations succeed when people stay in control, outcomes stay measurable, and trust stays intact.

TL;DR: Start with business alignment, secure a unified data foundation, pick high-value use cases, build scalable AI architecture, and operationalize with MLOps and governance; expect a 12–18 month roadmap.