I still remember the Thursday when our SDRs spent an entire morning wrestling with a spreadsheet of lukewarm prospects — two hours per lead, burned coffee, and a mountain of guesswork. That day I decided to stop treating lead qualification like a ritual and start treating it like a data problem. In this post I’ll walk you through how I moved from manual hunches to AI-driven signals that qualify leads 10x faster, what tools I used (and why), and the measurable wins that followed.

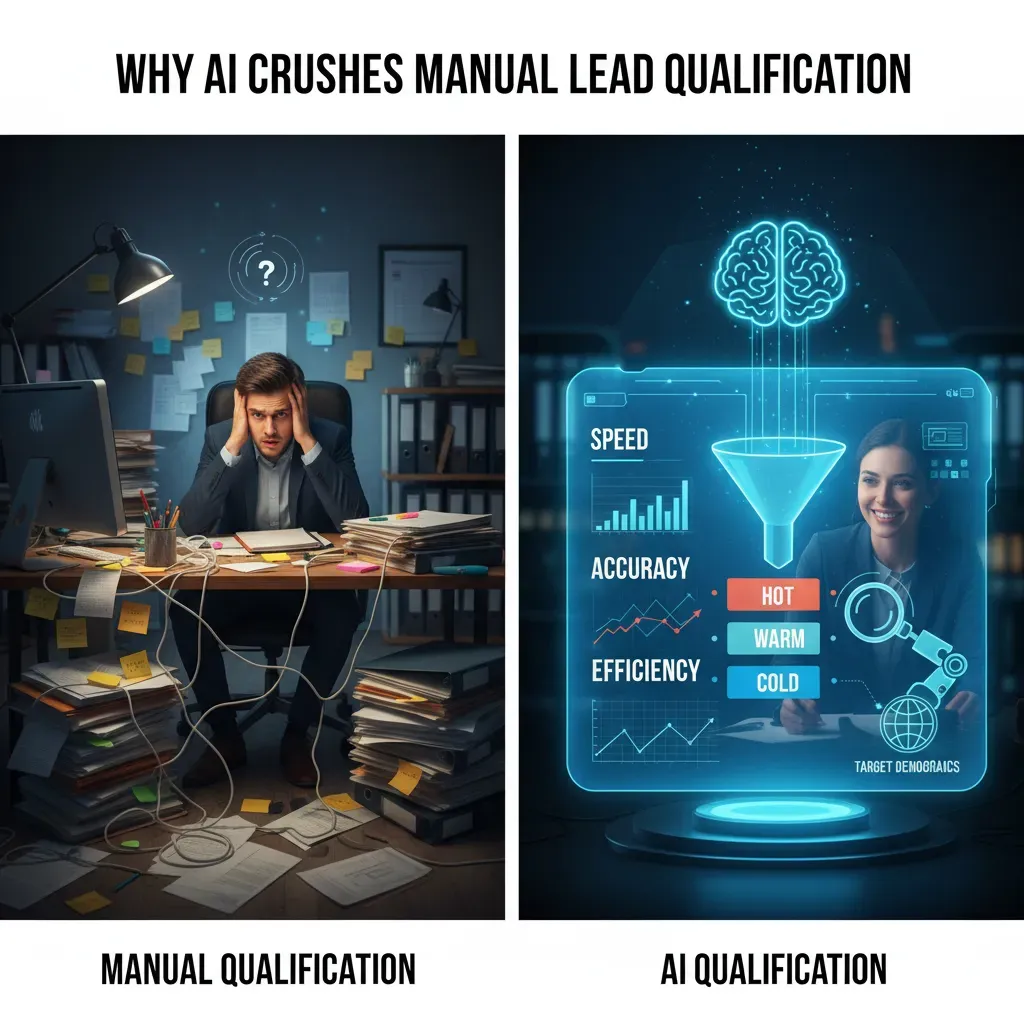

Why AI Crushes Manual Lead Qualification

My manual scoring agony (and the moment I knew it had to change)

I used to qualify leads the “responsible” way: open the CRM, read notes, check LinkedIn, scan the website, look at email replies, then assign a score in a spreadsheet. It sounded organized, but it felt like slow pain. I’d spend up to two hours on a single prospect when the data was messy or spread across tools. Worse, after all that effort, I still had that nagging doubt: Did I miss something important?

“I wasn’t losing leads because we lacked effort. I was losing them because manual methods can’t keep up with real buying signals.”

From 2 hours to 2 minutes per prospect in real workflows

When I started using AI for lead qualification, the biggest shock was speed. Instead of hunting for clues, I could feed the system the same inputs we already had—CRM fields, email engagement, web visits, firmographic data—and get a clear recommendation fast. In a normal workflow, what used to take me 2 hours became closer to 2 minutes per prospect because AI can process and compare patterns instantly.

It doesn’t just “score” faster; it also explains why a lead is hot, warm, or cold, so I’m not blindly trusting a number.

Static rules vs dynamic signal analysis

Manual scoring usually relies on a small set of rules. I’d typically use 5–10 signals, like job title, company size, industry, and whether they booked a demo. The problem is those rules are static. They don’t adapt when your market shifts or when buyer behavior changes.

AI lead qualification works differently. It can evaluate 50+ signals at once and weigh them based on what actually correlates with conversion. That includes:

- Intent signals (repeat visits to pricing pages, comparison pages)

- Engagement depth (time on site, return frequency, content consumed)

- Fit signals (tech stack, growth stage, hiring trends)

- Response patterns (email timing, sentiment, objections)

Top-line benefits I can measure

Once AI replaced my manual process, the results were hard to ignore:

- 40% more qualified opportunities because fewer good leads slipped through

- 40–60% accuracy in identifying sales-ready prospects vs 15–25% with manual scoring

- More consistent decisions across the team (less “gut feel” scoring)

Key Techniques: Predictive Scoring, Intent Detection & ML

Predictive lead scoring (and how ML weights signals)

When I qualify leads with AI, I start with predictive scoring. Instead of guessing based on one field like “Company Size,” machine learning looks at many signals at once and assigns a score that reflects how likely a lead is to convert. The key is that the model weights signals differently based on what has worked in the past.

For example, a pricing-page visit might matter more than a newsletter signup, and a repeat visit might matter more than a single click. ML learns these patterns from historical wins and losses, then updates as new data comes in.

- Firmographic signals: industry, company size, location

- Engagement signals: pages viewed, time on site, return visits

- Fit + timing signals: job role, tech stack, recent activity spikes
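To make the weighting idea concrete, here is a minimal sketch of how a trained model could turn several signals into a single 0–100 score. It assumes a logistic-style model; the signal names, weights, and bias are hypothetical placeholders for values a real model would learn from historical wins and losses.

```python
import math

# Hypothetical weights standing in for coefficients a trained model would learn.
# None of these numbers come from real data.
WEIGHTS = {
    "pricing_page_visits": 0.9,
    "return_visits": 0.4,
    "newsletter_signup": 0.1,
    "target_industry": 0.6,
    "senior_role": 0.5,
}
BIAS = -2.5  # baseline log-odds when no signal is present


def predict_score(signals: dict[str, float]) -> int:
    """Return a 0-100 score: weighted sum of known signals passed through a sigmoid."""
    z = BIAS + sum(
        WEIGHTS[name] * value for name, value in signals.items() if name in WEIGHTS
    )
    probability = 1.0 / (1.0 + math.exp(-z))
    return round(probability * 100)


# Two illustrative leads: one casual subscriber, one actively evaluating.
browsing = {"newsletter_signup": 1}
evaluating = {
    "pricing_page_visits": 2,
    "return_visits": 3,
    "target_industry": 1,
    "senior_role": 1,
}

print(predict_score(browsing), predict_score(evaluating))
```

With these made-up weights, the lead with repeat pricing visits lands far above the newsletter subscriber, which is the behavior the weighting is meant to capture.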

Intent detection beats simple form fills

Intent detection is the difference between “someone filled a form” and “someone is actively trying to solve the problem I sell.” Form fills can be low-quality because people download content out of curiosity. Intent detection uses behavior and context to spot buying signals, like comparing plans, reading implementation docs, or searching for integration details.

In my experience, intent signals tell me why a lead is here, not just who they are.

AI can also classify intent from text (chat messages, emails, call notes) using natural language patterns, so I can route leads faster without manual review.

Behavioral analysis and anonymous visitor scoring

Behavioral analysis tracks engagement patterns across sessions. This matters because many high-intent visitors are anonymous at first. AI can still score them using session data, then connect the dots once they identify themselves.

- Track events (views, clicks, scroll depth, repeat visits)

- Detect patterns (research mode vs. buying mode)

- Assign a score and trigger actions (alerts, outreach, nurture)

I often map key actions into a simple rules layer too, like:

`if pricing_visits >= 2 and demo_click == true then score += 25`
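The rule and the anonymous-visitor flow can be sketched together in Python. This is an illustration under assumptions: the event names, point values, and the `VisitorScores` class are hypothetical, and a real setup would read events from an analytics tool rather than from memory.

```python
from collections import defaultdict

# Hypothetical point values per event type; tune against your own conversion data.
EVENT_POINTS = {"page_view": 1, "pricing_view": 10, "demo_click": 15, "return_visit": 5}


def score_events(events: list[str]) -> int:
    """Sum base points, then apply the rules-layer bonus described in the text."""
    score = sum(EVENT_POINTS.get(event, 0) for event in events)
    pricing_visits = events.count("pricing_view")
    demo_click = "demo_click" in events
    if pricing_visits >= 2 and demo_click:
        score += 25
    return score


class VisitorScores:
    """Keeps events per anonymous visitor ID and merges them on identification."""

    def __init__(self) -> None:
        self.events: dict[str, list[str]] = defaultdict(list)

    def track(self, visitor_id: str, event: str) -> None:
        self.events[visitor_id].append(event)

    def identify(self, visitor_id: str, email: str) -> int:
        """Move the anonymous history under the email and return the new score."""
        self.events[email].extend(self.events.pop(visitor_id, []))
        return score_events(self.events[email])


tracker = VisitorScores()
for event in ["page_view", "pricing_view", "return_visit", "pricing_view", "demo_click"]:
    tracker.track("anon-42", event)

# 41 base points plus the 25-point bonus for two pricing views and a demo click.
print(tracker.identify("anon-42", "ana@example.com"))  # 66
```

Keeping the history keyed by visitor ID until identification means none of the early research behavior is lost when the visitor finally fills in a form.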

How tools handle signals (Leadspicker, Origami Agents, SynthFlow)

Leadspicker helps me capture and enrich lead signals, then prioritize outreach based on activity and fit. Origami Agents can automate qualification steps by interpreting intent and routing leads to the right workflow. SynthFlow supports conversational qualification, collecting details while also using engagement cues to decide when a lead is sales-ready.

Integrating AI into Your Stack: CRM, Workflows & Enrichment

Step-by-step: connect predictive scoring to my CRM

To qualify leads 10x faster with AI, I start by putting the score where my team already works: the CRM. My basic setup looks like this:

- Pick the scoring source: my AI scoring tool (or a model in my data warehouse) that outputs a number like 0–100.

- Create CRM fields: AI Score, AI Tier (Hot/Warm/Cold), and Score Updated At.

- Map identifiers: match on email + company domain to avoid duplicates.

- Sync on a schedule: near real-time if possible; otherwise every 15–60 minutes.

- Route high-score leads: assign owners, set priority, and move lifecycle stage automatically.
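The steps above can be sketched as a small upsert routine. This is not any vendor's API: `InMemoryCrm` is a hypothetical stand-in for a real CRM client, the field names mirror the ones listed above, and the tier cutoffs (80 and 50) are the thresholds I describe in the workflow section.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


def tier_for(score: int) -> str:
    """Map a 0-100 score to a tier using the 80/50 cutoffs."""
    if score >= 80:
        return "Hot"
    if score >= 50:
        return "Warm"
    return "Cold"


def match_key(email: str, company_domain: str) -> tuple[str, str]:
    """Normalize email + company domain so one person maps to one record."""
    domain = company_domain.strip().lower().removeprefix("www.")
    return (email.strip().lower(), domain)


@dataclass
class CrmRecord:
    email: str
    company_domain: str
    ai_score: int = 0
    ai_tier: str = "Cold"
    score_updated_at: Optional[datetime] = None


class InMemoryCrm:
    """Hypothetical stand-in; a real integration would call the CRM's own API."""

    def __init__(self) -> None:
        self.records: dict[tuple[str, str], CrmRecord] = {}

    def upsert_score(self, email: str, company_domain: str, score: int) -> CrmRecord:
        key = match_key(email, company_domain)
        # Reuse the existing record if the normalized key already exists.
        record = self.records.setdefault(key, CrmRecord(*key))
        record.ai_score = score
        record.ai_tier = tier_for(score)
        record.score_updated_at = datetime.now(timezone.utc)
        return record


crm = InMemoryCrm()
crm.upsert_score("Ana@Example.com ", "www.example.com", 55)
latest = crm.upsert_score("ana@example.com", "example.com", 84)
print(len(crm.records), latest.ai_tier)  # 1 Hot
```

Normalizing the key before the lookup is what keeps the second sync from creating a duplicate lead.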

I keep the first rollout simple: one score, one tier, and one routing rule. Complexity comes later.

Automated workflows: thresholds, alerts, and SDR handoffs

Once the score is in the CRM, I use workflows to remove manual sorting. I define thresholds based on past conversion data, then adjust weekly.

- Hot: score ≥ 80 → assign to SDR queue + create task “Call in 5 minutes.”

- Warm: 50–79 → enroll in email sequence + notify owner in Slack.

- Cold: < 50 → keep in nurture, no SDR time spent.
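One way to keep those thresholds easy to adjust is to hold them in a small table and look up the actions from it. A minimal sketch, where the action strings are hypothetical labels that a workflow tool would translate into real steps:

```python
from typing import NamedTuple


class Tier(NamedTuple):
    name: str
    min_score: int
    actions: tuple[str, ...]


# Ordered from highest to lowest threshold; edit the numbers here when
# conversion data suggests a different cutoff.
TIERS = (
    Tier("Hot", 80, ("assign_sdr_queue", "create_task: Call in 5 minutes")),
    Tier("Warm", 50, ("enroll_email_sequence", "notify_owner_slack")),
    Tier("Cold", 0, ("keep_in_nurture",)),
)


def plan_actions(score: int) -> Tier:
    """Return the first tier whose minimum score the lead meets."""
    for tier in TIERS:
        if score >= tier.min_score:
            return tier
    return TIERS[-1]  # negative or malformed scores fall back to Cold


for score in (92, 64, 12):
    tier = plan_actions(score)
    print(score, tier.name, tier.actions)
```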

My rule: alerts should create action, not noise. If an alert doesn’t change behavior, I remove it.

For handoffs, I add a required field like Next Best Action so SDRs see why the lead is hot (pricing page visit, high-fit industry, repeat demo views).

Lead enrichment and attribution: firmographics + behavioral signals

AI works better when records are complete. I enrich leads automatically with:

- Firmographics: industry, employee count, revenue range, tech stack, HQ location.

- Behavioral signals: page depth, return visits, webinar attendance, email clicks, product signups.

- Attribution: first-touch and last-touch source, plus key “assist” events.

I store these as separate fields so the model can learn and my team can filter lists without guessing.

Common pitfalls (and how I staged the rollout)

The biggest issues I hit were dirty data, duplicate leads, and over-routing. I mitigated them by launching in stages:

- Week 1: scoring visible only (no automation).

- Week 2: routing for Hot leads only.

- Week 3: add enrichment + warm workflows.

Conversational AI & Voice Agents: Qualify in Real Time

How conversational AI finds buying intent in natural chats

When I use AI chat on a site or inside a product, I’m not trying to “trap” people with forms. I’m trying to learn intent during a normal conversation. The best conversational AI listens for signals like urgency, budget language, and specific use cases. For example, “We need this live by next month” is a strong timeline cue, and “We’re replacing HubSpot” is a clear sign the buyer intends to switch tools.
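To show the shape of cue detection, here is a deliberately simple sketch that uses regular expressions as a stand-in for a language model. The cue names and patterns are hypothetical, and a production system would rely on a trained classifier rather than keyword matching.

```python
import re

# Hypothetical patterns; real conversational AI would use a trained model.
CUE_PATTERNS = {
    "timeline": re.compile(
        r"\b(by|within|before)\s+(the\s+end\s+of\s+)?(next|this)\s+(week|month|quarter)\b",
        re.IGNORECASE,
    ),
    "switching": re.compile(
        r"\b(replacing|switching from|moving off|migrating from)\b", re.IGNORECASE
    ),
    "budget": re.compile(r"\b(budget|pricing|quote|cost)\b", re.IGNORECASE),
}


def detect_cues(message: str) -> set[str]:
    """Return the names of all cues whose pattern appears in the message."""
    return {name for name, pattern in CUE_PATTERNS.items() if pattern.search(message)}


print(detect_cues("We need this live by next month"))  # {'timeline'}
print(detect_cues("We’re replacing HubSpot"))  # {'switching'}
print(detect_cues("Just browsing, thanks"))  # set()
```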

I also design questions that feel helpful, not pushy. Instead of “What’s your budget?”, I ask: “Are you looking for a starter plan or something built for a larger team?” That still gives me a budget range without friction.

The role of AI voice agents in real-time qualification and handoff

Voice agents take this further because they qualify while the lead is already engaged. In my deployments, an AI voice agent can answer common questions, confirm fit, and then hand off to a sales rep in the same call or within minutes. The key is real-time routing: if the AI detects high intent, it stops “chatting” and starts “connecting.”

- Captures details: company size, current tool, timeline, and decision role

- Scores intent live and updates the CRM instantly

- Books meetings or warm-transfers to the right rep

Scripts and guardrails that keep it human

I write scripts as flexible blocks, not rigid lines. The AI should sound like a helpful coordinator, not a robot. My guardrails are simple:

- Stay on goal: qualify, route, or schedule—no long detours.

- Ask one question at a time and confirm key facts.

- Safe fallback: if confidence is low, offer a human handoff.

“If I can’t answer that confidently, I can connect you with a specialist—would you like that?”

Handoff triggers and SLA rules I’ve used

These are examples of triggers and service rules that have worked well for me:

| Trigger | Action | SLA |
| --- | --- | --- |
| Mentions “pricing” + timeline < 30 days | Warm transfer to sales | Connect in < 2 minutes |
| Decision-maker confirmed | Auto-book meeting | Offer 3 slots instantly |
| Enterprise keywords (SSO, SOC 2, procurement) | Route to enterprise rep | First response < 10 minutes |
| Low confidence intent score | Create task + nurture | Follow-up within 24 hours |

I usually store the rules in a simple config so ops can adjust without code:

`if intent_score >= 0.80 and timeline_days <= 30 => handoff("sales_now")`
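A config like that can be evaluated with very little code. The sketch below is one possible shape, not a specific tool's format: the rule names, the condition keys, and the first-match-wins ordering are all assumptions made for illustration.

```python
# Hypothetical rule config; ops could keep this in YAML or JSON instead.
RULES = [
    {"name": "sales_now", "when": {"intent_score_min": 0.80, "timeline_days_max": 30}},
    {"name": "enterprise_rep", "when": {"keywords_any": ["sso", "soc 2", "procurement"]}},
    {"name": "auto_book", "when": {"decision_maker": True}},
]
DEFAULT_HANDOFF = "nurture_task"  # low-confidence leads get a task plus nurture


def matches(when: dict, lead: dict) -> bool:
    """Every condition present in `when` must hold for the lead."""
    if "intent_score_min" in when and lead.get("intent_score", 0.0) < when["intent_score_min"]:
        return False
    if "timeline_days_max" in when:
        timeline = lead.get("timeline_days")
        if timeline is None or timeline > when["timeline_days_max"]:
            return False
    if "keywords_any" in when:
        text = lead.get("transcript", "").lower()
        if not any(keyword in text for keyword in when["keywords_any"]):
            return False
    if "decision_maker" in when and lead.get("decision_maker") != when["decision_maker"]:
        return False
    return True


def pick_handoff(lead: dict) -> str:
    """Return the first matching rule name, or the default handoff."""
    for rule in RULES:
        if matches(rule["when"], lead):
            return rule["name"]
    return DEFAULT_HANDOFF


print(pick_handoff({"intent_score": 0.85, "timeline_days": 21}))  # sales_now
print(pick_handoff({"intent_score": 0.30}))  # nurture_task
```

Because the rules are plain data, changing a threshold or reordering priorities does not require touching the evaluation code.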

Measuring Success: Metrics, Dashboards & Common Pitfalls

If I want AI to qualify leads 10x faster, I can’t judge it by “it feels better.” I track clear metrics that show speed and quality, then I watch for the common ways AI can quietly go wrong.

Essential KPIs I track

- Qualification accuracy: How often the AI’s “qualified” decision matches the final outcome (SQL accepted, opportunity created, or closed-won).

- False positive rate: Leads marked as qualified that sales rejects. This is the fastest way to lose trust.

- False negative rate: Good leads the AI downgrades or ignores. This hurts growth and is easy to miss.

- Time-to-handoff: Minutes/hours from first touch to sales-ready handoff. This is where AI should shine.

- Pipeline velocity: How quickly leads move from MQL → SQL → opportunity. I watch stage-to-stage conversion and time-in-stage.
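Most of these KPIs fall out of a simple comparison between the AI decision and the sales outcome. The sketch below assumes each lead is recorded as a tuple of (AI qualified, sales accepted, minutes to handoff) and uses the standard confusion-matrix denominators; the sample data is invented. If you prefer "share of AI-qualified leads that sales rejected", that is 1 minus precision.

```python
from statistics import median


def _ratio(numerator: int, denominator: int) -> float:
    """Safe division that returns 0.0 when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def qualification_kpis(outcomes: list[tuple[bool, bool, object]]) -> dict:
    """outcomes: (ai_qualified, sales_accepted, minutes_to_handoff or None)."""
    tp = sum(1 for ai, ok, _ in outcomes if ai and ok)
    fp = sum(1 for ai, ok, _ in outcomes if ai and not ok)
    fn = sum(1 for ai, ok, _ in outcomes if not ai and ok)
    tn = sum(1 for ai, ok, _ in outcomes if not ai and not ok)
    handoffs = [minutes for _, _, minutes in outcomes if minutes is not None]
    return {
        "accuracy": _ratio(tp + tn, len(outcomes)),
        "precision": _ratio(tp, tp + fp),
        "false_positive_rate": _ratio(fp, fp + tn),  # rejected leads the AI qualified
        "false_negative_rate": _ratio(fn, fn + tp),  # good leads the AI missed
        "median_minutes_to_handoff": median(handoffs) if handoffs else None,
    }


# Invented sample: 3 true positives, 1 false positive, 1 false negative, 3 true negatives.
sample = [
    (True, True, 4), (True, True, 6), (True, False, 3), (True, True, 10),
    (False, False, None), (False, False, None), (False, True, None), (False, False, None),
]
print(qualification_kpis(sample))
```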

Dashboards & attribution that reflect AI-driven conversions

I build one dashboard that combines marketing, sales, and AI signals. The goal is to connect the AI decision to revenue outcomes, not just activity.

| Dashboard view | What I include |
| --- | --- |
| Speed | Time-to-handoff, response time, time-in-stage |
| Quality | Accuracy, false positives/negatives, sales acceptance rate |
| Revenue impact | Opportunity rate, win rate, pipeline created, revenue influenced |

For attribution, I tag every lead with an AI_Qualified = true/false flag and store the model version. Then I compare outcomes by channel, segment, and rep team to avoid “AI gets credit for everything.”

Common failure modes (and how I detect them)

- Data drift: Lead behavior changes over time. I monitor weekly shifts in key inputs (industry mix, source mix, intent signals).

- Overfitting: The model looks great in training but fails in real life. I check performance on new time periods, not just random splits.

- Noisy signals: Clicks, form fills, or bot traffic inflate scores. I audit top “qualified” leads and look for patterns like fake domains.
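For the weekly drift check, one lightweight option is to compare the mix of a categorical input (such as lead source) between a baseline week and the current week. The sketch below uses total variation distance, which is my choice for illustration rather than a standard the tools above prescribe; the alert threshold and the sample data are hypothetical.

```python
from collections import Counter

DRIFT_ALERT_THRESHOLD = 0.10  # hypothetical cutoff; calibrate on your own history


def mix(values: list[str]) -> dict[str, float]:
    """Share of each category in the list (empty input gives an empty mix)."""
    total = len(values)
    if total == 0:
        return {}
    return {category: count / total for category, count in Counter(values).items()}


def drift(baseline: list[str], current: list[str]) -> float:
    """Total variation distance: 0.0 means identical mix, 1.0 means no overlap."""
    p, q = mix(baseline), mix(current)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in p.keys() | q.keys())


# Invented example: paid traffic grows from 30% to 50% of leads.
last_month = ["organic"] * 50 + ["paid"] * 30 + ["webinar"] * 20
this_week = ["organic"] * 30 + ["paid"] * 50 + ["webinar"] * 20

distance = drift(last_month, this_week)
print(round(distance, 2), distance > DRIFT_ALERT_THRESHOLD)  # 0.2 True
```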

If sales says “these leads are junk,” I treat it as a metric problem first, not a people problem.

A/B testing AI vs manual (and reading lift correctly)

I run a clean test: split similar leads into two groups—manual qualification vs AI—over the same time window. I measure lift on sales acceptance, opportunity rate, and time-to-handoff. If AI is faster but increases false positives, I tighten thresholds or add stronger intent filters before scaling.
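Reading lift correctly means checking that the difference is larger than noise. A minimal sketch using a two-proportion z-test on sales acceptance, with invented counts; as a rough guide, an absolute z above about 1.96 corresponds to significance at the 5% level in a two-sided test.

```python
import math


def lift_and_z(
    control_success: int, control_total: int, test_success: int, test_total: int
) -> tuple[float, float]:
    """Return (relative lift, z-score) for test vs control conversion rates."""
    p_control = control_success / control_total
    p_test = test_success / test_total
    lift = (p_test - p_control) / p_control
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (control_success + test_success) / (control_total + test_total)
    standard_error = math.sqrt(
        pooled * (1 - pooled) * (1 / control_total + 1 / test_total)
    )
    z = (p_test - p_control) / standard_error
    return lift, z


# Invented counts: manual group 40 of 200 accepted, AI group 60 of 200 accepted.
lift, z = lift_and_z(40, 200, 60, 200)
print(f"lift={lift:.0%}, z={z:.2f}")  # lift=50%, z=2.31
```

I would run the same calculation on opportunity rate, and separately compare false positive rates, before treating a faster handoff as a win.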

Roadmap, Case Study & Next Steps

A simple rollout roadmap: pilot, iterate, scale

When I roll out AI for lead qualification, I do it in phases so the team trusts the results. In week 1, I run a pilot with one channel (usually inbound demo requests) and one small sales pod. The checkpoint is clear: the AI must match human decisions on fit and urgency at least 80% of the time, and it must cut first-pass review time by 50%.

In weeks 2–3, I iterate. I review misclassified leads, refine the scoring rules, and tighten the questions the AI asks. My checkpoint here is quality: fewer false positives reaching AEs, and faster handoff to the right stage. By weeks 4–6, I scale to more sources like webinars, paid search, and outbound replies. The checkpoint becomes business impact: higher meeting-to-opportunity conversion and shorter speed-to-lead.

Case study: from 2 hours to 2 minutes

Imagine a SaaS startup selling workflow software to mid-market teams. Before AI, an SDR spent about 2 hours per lead across research, enrichment, and back-and-forth emails. After adding AI, the system pulled firmographic data, summarized intent signals, and asked two short qualifying questions. The SDR only reviewed the summary and approved the next step. The result: qualification dropped to about 2 minutes per lead, and the team saw 40% more qualified leads in the pipeline because they stopped wasting time on poor-fit accounts.

Vendor and metric alignment (MEDDIC + B2B conversion)

Before I choose a vendor, I make sure it supports my process, not the other way around. I check that it can map fields to MEDDIC (Metrics, Economic buyer, Decision criteria, Decision process, Identify pain, Champion) and report on B2B conversion metrics like lead-to-meeting, meeting-to-opportunity, and opportunity-to-close. I also confirm it can explain why a lead scored high, integrate with my CRM, and keep data clean with clear audit logs.

My closing tips: keep humans in the loop

AI qualifies leads fast, but I never let it run alone. I set a culture rule: AI recommends, people decide for edge cases. I retrain the model on a steady cadence (monthly for fast-moving teams, quarterly for stable ones) and review a sample of decisions every week. The best way to avoid over-automation is to protect the buyer experience: if a prospect shows confusion or high intent, I route them to a human immediately. That balance is how I get speed and trust.

AI lead qualification slashes manual scoring time, increases accuracy and pipeline velocity, and works best when combined with CRM integration, predictive scoring, and conversational agents.