The first time I trusted a forecast, it was for something embarrassingly small: how many croissants we’d sell at a pop-up coffee bar I helped a friend run. I built a tiny spreadsheet “model,” ignored weather data, and we ran out by 10 a.m.—which felt like a win until we realized we’d left money (and some grumpy customers) on the table. That’s the moment predictive analytics clicked for me: forecasts aren’t fortune-telling; they’re a way to make better bets with the information you actually have. In business, those bets show up as inventory, staffing, churn prevention, pricing, and expansion timing. This post is my field guide to predictive analytics for business growth—part strategy, part cautionary tale, and part “here’s what I’d do if I had to rebuild the whole thing next quarter.”

1) Predictive Analytics: the “better bets” mindset

Predictive Analytics is how I use past and current data to estimate what’s likely to happen next—so I can place better bets in my business. It’s not a crystal ball, and it’s not “AI that knows the future.” It’s a structured way to turn uncertainty into probabilities that support Decision Making.

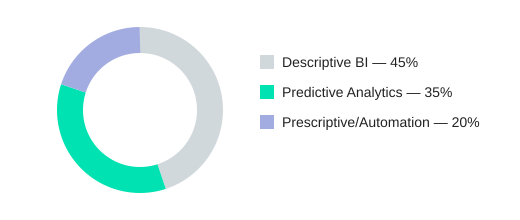

I like to think of Business Intelligence as the reporting layer: dashboards tell me what happened. Predictive models build on that to suggest what might happen. And when I connect predictions to actions, I’m moving toward prescriptive analytics: what to do to improve Business Outcomes.

My croissant lesson: forecasts fail without context

I once tried to forecast bakery demand (yes, croissants) using only sales history. The model looked “smart” on my dashboard—until a rainy week hit. Foot traffic dropped, and my forecast missed badly. The problem wasn’t math; it was missing context (weather, local events, seasonality). That’s why being Data Driven also means being context-aware, not dashboard-blind.

Where Predictive Analytics actually drives Business Outcomes

- Demand: set reorder points, pricing tests, and inventory buffers.

- Churn: trigger outreach when a customer’s risk score rises.

- Fraud/risk prevention: flag unusual patterns early.

- Staffing: schedule based on expected volume, not gut feel.

Right now, predictive analytics is converging with AI in a useful way: not just predicting outcomes, but enabling prevention and earlier intervention (for example, detecting churn signals sooner so I can act before revenue is lost).

A quick litmus test for decision sensitivity

I ask: would I change a business decision if the prediction moved by ±10%? If not, the model may be interesting—but not operational.

| Analytics type | Question | Example decision (±10% demand) |
|---|---|---|
| Descriptive (BI) | What happened? | Sales report shows last week’s volume |
| Predictive | What might happen? | If forecast rises 10% → reorder “yes”; if drops 10% → reorder “no” |
| Prescriptive | What should we do? | Auto-reorder + adjust safety stock rules |
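The ±10% litmus test is easy to run in code. Here is a minimal sketch, assuming a deliberately simple reorder rule (reorder when forecast plus safety stock exceeds stock on hand). The function names and all numbers are hypothetical, not taken from a real system.

```python
def reorder_decision(forecast_units: float, on_hand_units: float, safety_stock: float) -> bool:
    """Reorder when projected demand plus the buffer exceeds what is on the shelf."""
    return forecast_units + safety_stock > on_hand_units


def is_decision_sensitive(forecast_units: float, on_hand_units: float,
                          safety_stock: float, swing: float = 0.10) -> bool:
    """True if moving the forecast by +/- swing flips the reorder decision."""
    low = reorder_decision(forecast_units * (1 - swing), on_hand_units, safety_stock)
    high = reorder_decision(forecast_units * (1 + swing), on_hand_units, safety_stock)
    return low != high


# Hypothetical numbers: forecast of 1,000 units and 20 units of safety stock.
print(is_decision_sensitive(1000, 1050, 20))  # True: the forecast is operational here
print(is_decision_sensitive(1000, 2000, 20))  # False: overstocked, the forecast changes nothing
```

When the second case comes back `False`, that is my cue that the model is interesting but not yet tied to a decision.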

Statistical thinking as a habit

I try to think in base rates, confidence intervals, and “what would change my mind?” It’s like packing for a trip: I bring a jacket because rain is possible, not certain.
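To make "base rate plus interval" concrete, here is a small sketch that turns a count into a plausible range using the Wilson score interval. Wilson is one common choice among several. The rain counts are made up for illustration.

```python
import math
from statistics import NormalDist


def wilson_interval(successes: int, trials: int, confidence: float = 0.95):
    """Wilson score interval for a proportion (e.g. the share of rainy days)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half


# Hypothetical base rate: it rained on 12 of the last 60 comparable days.
low, high = wilson_interval(12, 60)
print(f"Base rate 20%, plausible range {low:.0%} to {high:.0%}")
```

The width of that range is the useful part: with only 60 days of history, "20% chance of rain" is a rough estimate, which is exactly why I pack the jacket.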

“The purpose of a model is to help you make a better decision, not to be right about the future.” — Cassie Kozyrkov

2) Data Quality is the unglamorous growth lever (Data Foundations)

I learned this the hard way during a “duplicate customers” fiasco. We had about 2% duplicate customer records, and it quietly polluted our churn model. The algorithm “saw” two customers where there was one, counted cancellations twice, and inflated churn. The result: a misallocated retention budget and sales reps chasing the wrong accounts inside the CRM. That’s when I stopped treating Data Quality as cleanup work and started treating it as growth insurance.
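Here is a toy reconstruction of how that distortion can happen. It is a sketch with invented records, and it assumes duplicates can be matched on a normalized email address. Real entity resolution is usually messier.

```python
# Hypothetical records: (customer_email, cancelled). Ana appears twice.
records = [
    ("ana@example.com", True),
    ("ANA@example.com ", True),   # same person, different casing and trailing space
    ("ben@example.com", False),
    ("cy@example.com", False),
    ("dee@example.com", False),
]


def churn_rate(rows):
    """Share of rows marked as cancelled."""
    return sum(cancelled for _, cancelled in rows) / len(rows)


def dedupe(rows):
    """Keep one row per normalized email; churned if any copy cancelled."""
    merged = {}
    for email, cancelled in rows:
        key = email.strip().lower()
        merged[key] = merged.get(key, False) or cancelled
    return list(merged.items())


raw = churn_rate(records)            # 2 cancellations / 5 rows = 0.40
clean = churn_rate(dedupe(records))  # 1 cancellation / 4 customers = 0.25
print(f"raw churn {raw:.0%}, deduplicated churn {clean:.0%}")
```

The gap is exaggerated by the tiny sample, but the mechanism is the same at scale: every duplicated cancellation is counted as an extra lost customer.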

Thomas C. Redman: “Where there is data smoke, there is business fire.”

Data Quality management checklist (the boring stuff that makes predictions work)

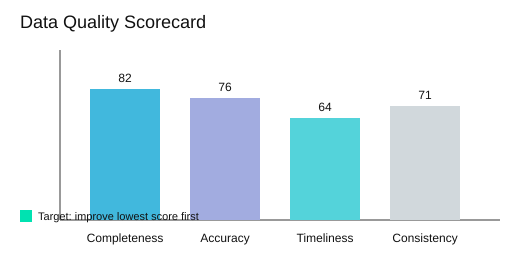

Strong Data Foundations come from repeatable checks, not heroics. I score four dimensions (0–100) and review them like a KPI:

- Completeness: Are required fields filled (email, segment, lifecycle stage)?

- Accuracy: Do values match reality (addresses, prices, status codes)?

- Timeliness (real time): Is the data fresh enough for real-time analytics, or is it stale?

- Consistency: Do systems agree (CRM vs ERP vs billing)?

| Dimension | Score (0–100) |
|---|---|
| Completeness | 82 |
| Accuracy | 76 |
| Timeliness | 64 |
| Consistency | 71 |

Operational impact: 2% duplicate customer records → inflated churn rate and misallocated retention budget
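As a rough sketch of how one of these scores could be produced and reviewed, the snippet below computes completeness as the share of required fields that are filled. It then flags any dimension under a review threshold. The field names, sample rows, and the threshold of 75 are assumptions for illustration. The four scores are the ones from the table.

```python
REQUIRED_FIELDS = ("email", "segment", "lifecycle_stage")  # illustrative field names


def completeness_score(rows):
    """Percentage (0-100) of required field slots that are non-empty."""
    total = len(rows) * len(REQUIRED_FIELDS)
    if total == 0:
        return 0.0
    filled = sum(
        1 for row in rows for name in REQUIRED_FIELDS
        if str(row.get(name) or "").strip()
    )
    return 100.0 * filled / total


def flag_dimensions(scores, threshold=75):
    """Return dimensions scoring below the threshold, worst first."""
    return sorted((d for d, s in scores.items() if s < threshold), key=scores.get)


# Hypothetical CRM rows with two missing values out of nine slots.
sample = [
    {"email": "ana@example.com", "segment": "SMB", "lifecycle_stage": "customer"},
    {"email": "", "segment": "SMB", "lifecycle_stage": "lead"},
    {"email": "cy@example.com", "segment": None, "lifecycle_stage": "customer"},
]
print(round(completeness_score(sample), 1))

scores = {"Completeness": 82, "Accuracy": 76, "Timeliness": 64, "Consistency": 71}
print(flag_dimensions(scores))  # ['Timeliness', 'Consistency']
```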

Data Strategy mini-plan: define core entities once

My simplest Data Strategy move is to pick three entities and define them once: customer, order, product. Write definitions, owners, and key fields, then enforce them across tools. This is also where light governance starts: naming rules, validation, and a single source of truth.

Data Democratization with Data Security (not a free-for-all)

Data Democratization improves user experience for analysts and stakeholders, but only with Data Security: role-based access, audit logs, and masked sensitive fields. Predictions embedded into CRM/ERP workflows only work when upstream data is trustworthy.

My practical rule: if I can’t explain a metric to a new hire, I don’t automate a decision with it yet.

3) From Business Intelligence to Market Intelligence (and back again)

How I hand off from Business Intelligence to prediction

I use Business Intelligence dashboards to spot patterns fast: a dip in conversion, a spike in returns, a region that suddenly grows. But dashboards mostly show what happened. Predictive models help me test why it happened and project what might happen next. That’s where real Business Insights start to form.

Avinash Kaushik: “All data in aggregate is crap.”

That quote reminds me to segment before I act—by product, region, channel, or customer type—so I don’t “fix” the wrong problem.

Market Intelligence use cases I rely on

Once BI flags a change, I shift into Market Intelligence to explain it with outside signals. Common use cases:

- Competitor pricing signals: price drops, bundles, shipping promos.

- Regional demand shifts: weather, local events, new store openings.

- Seasonality: earlier peaks, longer tails, or category swaps.

This is also where Operational Insight matters. I separate lagging indicators (revenue, churn) from leading indicators (site search terms, quote requests, cart adds). Leading indicators give me time to respond.

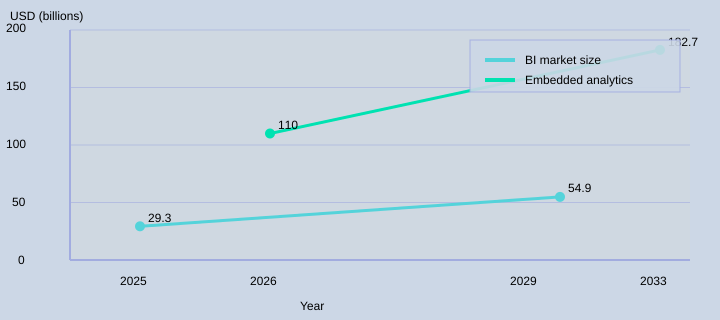

Analytics Trends 2026: embedded analytics everywhere

One of the biggest analytics trends I see for 2026 is insights moving into the tools people already use: CRM, support desks, inventory systems. The embedded analytics market is projected to reach USD 182.7B by 2033 (12.82% CAGR). That matters because “insight in the workflow” is more likely to trigger action than a dashboard tab no one opens.

My simple workflow (what fixed my “pretty dashboards” problem)

I’ve built plenty of pretty dashboards that changed nothing. What fixed it: a clear decision owner, one KPI, and a defined action.

- Weekly BI review highlights a pattern.

- I write a hypothesis in one line.

- I run a lightweight model to test and forecast.

- We make a decision (price, budget, inventory, messaging).

I trust dashboards most when they argue with my assumptions—because that’s when I know I’m learning, not just reporting.

| Market | Projection | Growth |
|---|---|---|
| Business intelligence | USD 29.3B (2025) → USD 54.9B (2029) | 13.1% CAGR |
| Embedded analytics | USD 182.7B by 2033 | 12.82% CAGR |

Even in a predictive era, BI still matters: the BI market is projected to grow from USD 29.3B in 2025 to USD 54.9B by 2029 (13.1% CAGR). For me, that growth makes sense—prediction needs a strong BI foundation.
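Quoted growth figures deserve a quick arithmetic check before they go into a plan. The sketch below applies the standard compound annual growth rate formula to the BI numbers above. Read literally as a four-year span (2025 to 2029), the two endpoints imply roughly 17% a year, not 13.1%. My guess is that the quoted rate uses a different base year or forecast window in the original report. That is an assumption on my part, since the source is not given here.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding at a fixed annual rate."""
    return start_value * (1 + rate) ** years


implied = cagr(29.3, 54.9, 2029 - 2025)
print(f"Implied CAGR from the endpoints: {implied:.1%}")

# What 13.1% a year would give from the same 2025 starting point.
print(f"USD {project(29.3, 0.131, 4):.1f}B by 2029 at 13.1%")
```

Either way the direction is the same, but I would confirm the base year before building a budget on the exact rate.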

4) Real Time + Edge Computing: when speed becomes strategy

In predictive analytics, I’ve learned that Real Time isn’t a nice-to-have—it’s a growth lever and a form of Risk Prevention. It matters most when a late decision is the same as a wrong decision: fraud detection, logistics rerouting, dynamic pricing, and customer support triage. If my AI Systems can spot a signal but can’t act fast, I’m paying for insight I can’t use.

Edge Computing (the version I give my cousin)

I explain Edge Computing like this: instead of sending every data point to the cloud and waiting, we compute closer to where the data happens—stores, warehouses, vehicles, kiosks, or factory lines. This trend is growing because companies want latency reduction and more resilience when networks wobble.

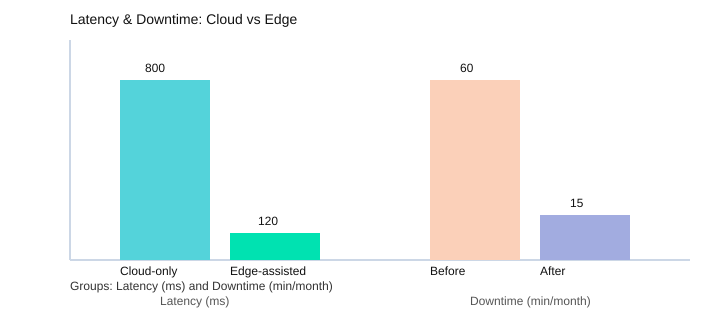

Latency reduction + resilience: “fast enough” wins

Real-time models don’t need to be perfect; they need to be fast enough to change outcomes. For example, cloud-only processing might take 800ms, while edge-assisted decisions can drop to 120ms. That difference can stop a fraudulent card swipe or prevent a missed delivery window.

Werner Vogels: “Everything fails, all the time.”

That quote is why I like edge buffering. When the connection drops, edge devices can keep working and sync later. In an illustrative setup, that could cut downtime from 60 minutes/month to 15 minutes/month.

| Metric | Option A | Option B |
|---|---|---|
| Latency (ms) | Cloud-only: 800 | Edge-assisted: 120 |
| Downtime (min/month) | Before edge buffering: 60 | After edge buffering: 15 |

Hypothetical scenario: retail reacts to a heatwave

Imagine a retail chain sees a heatwave forecast plus rising local searches. With real-time sales and inventory signals at the edge, the system shifts replenishment and pricing within hours—moving fans, water, and sunscreen to the right stores the same day, not weeks later. That’s Operational Insight turning into revenue.

Feasible mid-market use cases

- Customer support triage: route high-risk churn tickets instantly using real-time sentiment + account signals.

- Logistics alerts: edge rules detect temperature or delay risks and trigger actions before spoilage or SLA breaches.

Guardrails: speed can amplify bad data

Real time can also speed up mistakes. I add guardrails: data quality checks, confidence thresholds, rate limits, and a human override for high-impact actions. Fast decisions are only strategic when they’re safely controlled.
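Those four guardrails can be sketched as a single gate that every real-time action passes through. This is a minimal illustration, not a production design. The class name, the thresholds, and the use of a monetary amount as the "impact" signal are all hypothetical.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Guardrail:
    min_confidence: float = 0.8        # below this, do nothing
    max_data_age_s: float = 60.0       # data quality check: freshness
    max_actions_per_minute: int = 5    # rate limit
    high_impact_amount: float = 1000.0 # at or above this, a human decides
    _recent: list = field(default_factory=list)

    def decide(self, confidence, data_age_s, amount, now=None):
        """Return 'execute', an escalation, or a reason for skipping."""
        now = time.monotonic() if now is None else now
        if data_age_s > self.max_data_age_s:
            return "skip: stale data"
        if confidence < self.min_confidence:
            return "skip: low confidence"
        if amount >= self.high_impact_amount:
            return "escalate: human review"
        # Keep only actions from the last 60 seconds, then apply the limit.
        self._recent = [t for t in self._recent if now - t < 60]
        if len(self._recent) >= self.max_actions_per_minute:
            return "skip: rate limited"
        self._recent.append(now)
        return "execute"


gate = Guardrail()
print(gate.decide(confidence=0.93, data_age_s=4, amount=120))   # execute
print(gate.decide(confidence=0.93, data_age_s=4, amount=5000))  # escalate: human review
print(gate.decide(confidence=0.55, data_age_s=4, amount=120))   # skip: low confidence
```

The order of the checks is a design choice: I put the data checks first so that a fast system fails closed when its inputs are suspect.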

5) Autonomous Analytics & Process Automation: the promise (and the trap)

Autonomous Analytics is where predictive models don’t just forecast outcomes—they execute multi-step Business Processes based on those forecasts. This shift is accelerating as more teams adopt Agentic AI that can plan, decide, and act across tools (CRM, ERP, email, ticketing) with less human effort. As Andrew Ng put it:

“AI is the new electricity.”

What it looks like in real Business Processes

- Automated replenishment: predict stockouts, place orders, and update inventory targets.

- Lead scoring + outreach: score leads, route to the right rep, and draft follow-ups.

- Invoice anomaly detection: flag unusual charges, open a case, and request approval.

I like the speed and consistency of Process Automation, but I’m cautious. The “trap” is letting automation move faster than your governance. I learned this the hard way when an automated pricing rule got too aggressive with discounting. It boosted short-term conversions, but it trained customers to wait for deals and upset a few long-time accounts. What I changed: tighter constraints (minimum margin floors), approval thresholds, and audit logs that made every automated action explainable.
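The margin floor and approval threshold look roughly like this in code. It is a sketch under assumed numbers (a 25% minimum margin and approval required above a 15% discount), not the actual rule we ran.

```python
def guarded_discount(list_price, unit_cost, proposed_discount,
                     min_margin=0.25, approval_above=0.15):
    """Clamp a proposed discount to the margin floor and flag large ones."""
    # Lowest price that still keeps (price - cost) / price >= min_margin.
    floor_price = unit_cost / (1 - min_margin)
    max_discount = max(0.0, 1 - floor_price / list_price)
    applied = min(proposed_discount, max_discount)
    return {
        "applied_discount": round(applied, 4),
        "price": round(list_price * (1 - applied), 2),
        "clamped": applied < proposed_discount,   # the model asked for more
        "needs_approval": applied > approval_above,
    }


# Hypothetical product: list price 100, unit cost 60, model proposes 30% off.
print(guarded_discount(100, 60, 0.30))
# A modest 10% proposal passes through untouched and needs no approval.
print(guarded_discount(100, 60, 0.10))
```

The returned dictionary doubles as an audit record: it shows what the model wanted, what was applied, and whether a person had to sign off.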

Agentic AI + Conversational AI: powerful, but supervised

Conversational AI (a conversational interface) is the practical layer I use to make analytics usable: I can ask, “Which accounts are likely to churn this month?” and get an answer plus recommended actions. But with Agentic AI, I still don’t let it email customers unsupervised (yet). Drafting is fine; sending is a different risk category.

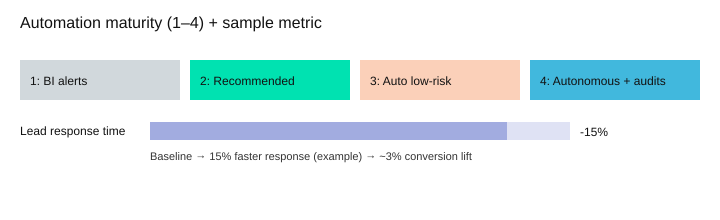

Design pattern: automate the decision, not the spreadsheet

- Human-in-the-loop thresholds: auto-execute low-risk actions; escalate high-impact ones.

- Escalation paths: route exceptions to the right owner fast.

- Audit logs: who/what/why for every action, so mistakes don’t become mysteries.
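The three pieces of this pattern fit together in a few lines. The sketch below is illustrative only: the action names, the `sales-ops` owner, and the in-memory log are hypothetical stand-ins for whatever your CRM or workflow tool provides.

```python
from datetime import datetime, timezone

AUDIT_LOG = []  # in practice this would be durable storage, not a list

LOW_RISK_ACTIONS = {"assign_lead", "schedule_follow_up"}  # hypothetical allow-list


def route_action(action: str, actor: str, reason: str, owner: str = "sales-ops") -> str:
    """Auto-execute allow-listed actions, escalate the rest, and log both."""
    if action in LOW_RISK_ACTIONS:
        status = "auto_executed"
    else:
        status = f"escalated_to:{owner}"
    AUDIT_LOG.append({
        "when": datetime.now(timezone.utc).isoformat(),
        "who": actor,
        "what": action,
        "why": reason,
        "status": status,
    })
    return status


print(route_action("assign_lead", "lead-scoring-model", "score 0.91 in EMEA segment"))
print(route_action("send_customer_email", "outreach-agent", "churn risk above 0.8"))
print(len(AUDIT_LOG))  # 2: escalations are logged too
```

An allow-list is the conservative default here: anything I have not explicitly marked low-risk goes to a person.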

| Level | Automation maturity ladder | Example outcome |
|---|---|---|
| 1 | BI alerts | Notify sales of slow lead response |
| 2 | Recommended actions | Suggest next-best outreach |
| 3 | Auto-execute low-risk | Auto-assign leads; schedule follow-up |
| 4 | Fully autonomous with audits | End-to-end execution with controls |

Hypothetical impact: 15% faster lead response time → 3% lift in conversion rate

6) AI Governance + ROI Measurement: the grown-up part of Predictive Analytics

When I use Predictive Analytics to make better bets, I also need better tracking of results. That’s where AI Governance and ROI Measurement stop being “extra work” and become the part that makes growth repeatable.

AI Governance keeps AI Systems trustworthy (not slow)

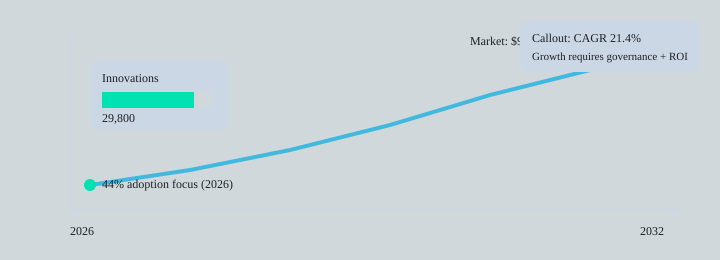

I don’t treat governance as red tape. I treat it as the rules that keep AI Systems safe, fair, and stable as they scale. The predictive analytics market is projected to reach USD 94.8B by 2032 (21.4% CAGR), and innovation activity in the space is reported at 11.04% yearly growth across 29,800 innovations. With that much momentum, the risk isn’t “no model.” The risk is “too many models, not enough control.” Governance means clear data access, versioning, monitoring drift, and data security (who can see what, how it’s stored, and how it’s audited).
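For the "monitoring drift" part, one widely used check is the Population Stability Index (PSI). It compares how a feature or score was distributed at training time with how it looks today. The sketch below assumes the values are already binned into counts. The bins and counts are invented, and the usual cut-offs (around 0.1 and 0.25) are rules of thumb, not guarantees.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index over pre-binned counts (same bin order)."""
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # eps avoids log(0) for empty bins
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score


training = [25, 25, 25, 25]    # hypothetical score distribution at launch
this_month = [10, 20, 30, 40]  # hypothetical distribution today

print(round(psi(training, training), 3))    # 0.0: no shift
print(round(psi(training, this_month), 3))  # a noticeably larger value
```

I treat a rising PSI as a prompt to investigate, not as proof the model is broken. The shift may be a real change in customers that the business needs to know about anyway.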

ROI Measurement that doesn’t feel fake

In my Business Strategy, I measure ROI like I would any investment: I set a baseline, run a pilot, and compare against a control group when possible. I also define a time horizon up front, because some wins show up fast (conversion lift) while others lag (churn reduction). This matters even more because AI accountability and ROI are expected to dominate predictive analytics trends in 2026 as budgets tighten and “prove it” becomes mandatory.
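The baseline, pilot, and control comparison reduces to a short calculation. This is a simplified sketch with made-up numbers. It ignores statistical significance and assumes each conversion has a fixed value.

```python
def pilot_roi(control_conv, control_n, pilot_conv, pilot_n,
              value_per_conversion, pilot_cost):
    """Compare a pilot group against a control group; return lift and ROI."""
    control_rate = control_conv / control_n  # what happens without the model
    pilot_rate = pilot_conv / pilot_n        # what happens with it
    incremental = (pilot_rate - control_rate) * pilot_n
    incremental_value = incremental * value_per_conversion
    return {
        "relative_lift": pilot_rate / control_rate - 1,
        "incremental_value": incremental_value,
        "roi": (incremental_value - pilot_cost) / pilot_cost,
    }


# Hypothetical pilot: 2,000 customers per group, USD 200 per conversion,
# and USD 4,000 spent on the pilot.
result = pilot_roi(100, 2000, 130, 2000, value_per_conversion=200, pilot_cost=4000)
print({name: round(value, 2) for name, value in result.items()})
```

Measuring against the control group, not against last quarter, is what keeps seasonality and one-off campaigns from being credited to the model.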

Accountability: who owns what?

I assign ownership in three layers: the data owner (quality and permissions), the model owner (performance and monitoring), and the decision owner (how predictions change actions). Without that, “the model said so” becomes an excuse instead of accountability.

Satya Nadella: “AI is the defining technology of our times.”

| Metric | Insight |
|---|---|
| Market size | USD 94.8B by 2032 |
| Growth | 21.4% CAGR |
| Innovation pace | 11.04% yearly growth; 29,800 innovations |
| 2026 adoption intent | 44% plan AI implementation focused on predictive analytics |

To close this out, I keep a small ritual: a quarterly model review that feels like a financial audit, but kinder. We check performance, bias signals, security access, and whether the prediction still maps to a real business decision. Sustainable growth comes from repeatable decision loops, not one heroic model that nobody can explain or measure.

TL;DR: Predictive analytics drives business growth when you pair clean data foundations with business intelligence, real-time decision loops, and clear ROI measurement—plus governance that keeps AI systems accountable.