I remember the day my product team realized a simple pricing tweak triggered unexpected regulatory attention — and that was before a flurry of new AI laws landed. In this post I walk you through the regulatory wave I’ve been tracking: what’s coming, what matters to your firm, and how to build pragmatic defenses without stifling innovation.

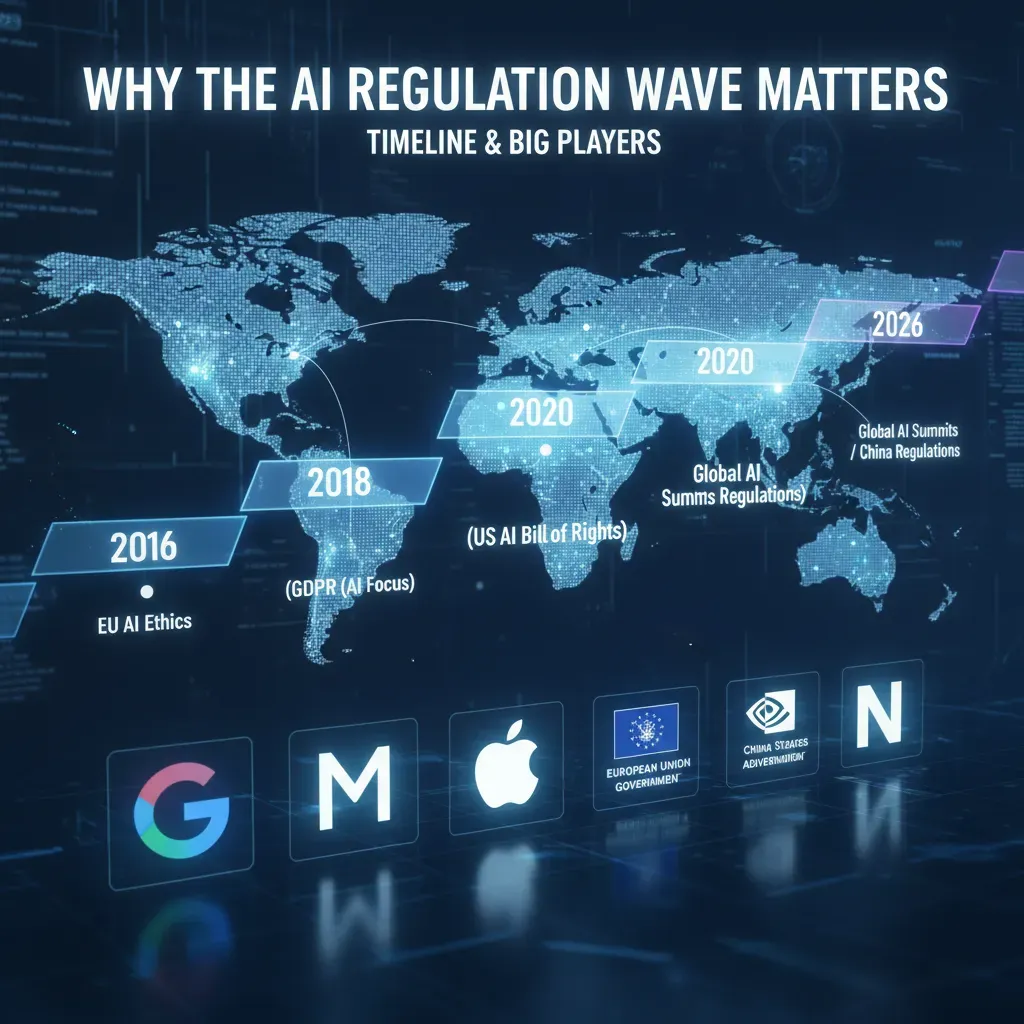

Why the AI Regulation Wave Matters: Timeline & Big Players

When I look at the AI Regulation Wave, what stands out is how fast it is moving from “future risk” to “operating reality.” For businesses, this isn’t just a legal topic. It affects product design, vendor choices, marketing claims, and even which markets you can enter without delays.

My quick timeline (what I’m watching)

- EU AI Act: Phasing in through 2026, with requirements rolling out in stages. If you sell into the EU (or serve EU users), this timeline matters now, not later.

- U.S. state laws: I’m tracking multiple state-level AI rules that become effective on Jan 1, 2026. That date is close in business planning terms, especially if you need new documentation, testing, or disclosures.

- Federal shift via Executive Order: A new federal Executive Order is changing priorities and signaling how agencies may enforce expectations around safety, transparency, and accountability.

Big regulatory players shaping business expectations

In practice, the “rules” aren’t coming from one place. They’re coming from a mix of states, the EU framework, and federal agencies. The players I see driving the most momentum include:

- California: Proposals and laws like the AI Safety Act and the Transparency in Frontier Artificial Intelligence Act (TFAIA) are setting a tone other states may follow.

- Colorado: A key state to watch for how it frames duties and consumer protections tied to automated systems.

- Texas (TRAIGA, the Texas Responsible Artificial Intelligence Governance Act): Another major signal that large states want their own approach to AI governance.

- EU framework: A structured, risk-based model that can force global companies to standardize controls.

- Federal agencies: Acting through Executive Order direction, guidance, and enforcement priorities.

What surprised me (and why it matters)

The biggest “wake-up” detail for me: California now requires disclosures from frontier developers with annual revenue over $500M. That is a clear threshold to watch. It also creates a planning problem: if you’re near that line (or expect to cross it), you may need to start compliance work before you hit the number.

Practical takeaway: map laws to products now

If I were advising a team today, I’d start with a simple jurisdiction map: where you operate, where your users are, and where your vendors run models. Waiting is a strategic risk because compliance tasks often look like:

- Updating disclosures and user notices

- Documenting training data and model limits

- Adding testing, monitoring, and incident response
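To make the jurisdiction map concrete, here is a minimal Python sketch of how I might record it. Everything in it is an assumption for illustration: the region codes, the `Product` fields, and the regime labels in `REGIMES_BY_REGION` are hypothetical shorthand for the laws mentioned above, not a legal determination of what applies to you.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    """One product line and the places it touches (all fields are illustrative)."""
    name: str
    operating_regions: set[str]
    user_regions: set[str]
    vendor_model_regions: set[str] = field(default_factory=set)


# Hypothetical lookup: region code -> regimes worth a closer look.
# The labels are shorthand for this post, not official statute names.
REGIMES_BY_REGION = {
    "EU": ["EU AI Act"],
    "US-CA": ["California Cartwright Act pricing updates",
              "California frontier disclosure rules"],
    "US-CO": ["Colorado impact assessment duties"],
    "US-TX": ["Texas AI governance law"],
    "US-IL": ["Illinois employment AI restrictions"],
}


def regimes_for(product: Product) -> list[str]:
    """Collect every regime linked to where we operate, serve users, or run vendor models."""
    regions = (product.operating_regions
               | product.user_regions
               | product.vendor_model_regions)
    found: set[str] = set()
    for region in regions:
        found.update(REGIMES_BY_REGION.get(region, []))
    return sorted(found)


checkout = Product(
    name="checkout-pricing",
    operating_regions={"US-CO"},
    user_regions={"EU"},
)
print(regimes_for(checkout))
```

The point of the sketch is the union of the three region sets: a product can pick up obligations from where its users or vendors sit, even if the company itself operates elsewhere.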

Spotting High‑Risk Systems and Compliance Triggers

When I help business teams prepare for the AI Regulation Wave, I start with one practical question: Where are we using automated decisions that can change someone’s life, money, or safety? Regulators often focus less on “AI” as a buzzword and more on impact. If your system makes or supports decisions, you should assume it may trigger compliance duties.

Flag automated decision systems in sensitive areas

I tell teams to flag any Automated Decision Systems that touch:

- Safety (workplace risk scoring, medical triage, fraud blocks that affect access)

- Employment (screening, ranking, scheduling, performance scoring, termination support)

- Finance (credit decisions, underwriting, collections prioritization)

- Public services (eligibility, benefits, housing, education access)

These are common “high‑risk” zones because errors and bias can cause real harm. Even if a human signs off, regulators may still treat the system as high impact if people rely on it.

Training data transparency is a compliance trigger

Training Data Transparency matters because regulators will ask: Where did the data come from, did you have consent, and can you trace it? I recommend documenting:

- Data sources (vendor, public, first‑party, scraped)

- Rights and consent status (licenses, user consent, opt‑outs)

- Lineage (how data moved, changed, and was labeled)

A simple internal record can be enough to start. I often use a lightweight “data card” format so teams can update it as models evolve.
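As a rough illustration of the “data card” idea, the sketch below stores the three things listed above (source, rights and consent, lineage) and reports what is still undocumented. The field names and the `gaps` check are my own assumptions, not a standard schema.

```python
from dataclasses import dataclass, field

# Source categories taken from the list above; the exact strings are illustrative.
KNOWN_SOURCE_TYPES = {"vendor", "public", "first-party", "scraped"}


@dataclass
class DataCard:
    """Lightweight record for one training dataset (hypothetical schema)."""
    dataset: str
    source_type: str            # one of KNOWN_SOURCE_TYPES
    rights_status: str          # e.g. "licensed", "user consent", "unknown"
    opt_out_supported: bool
    lineage: list[str] = field(default_factory=list)  # ordered processing steps

    def gaps(self) -> list[str]:
        """Return the documentation gaps a reviewer would ask about."""
        issues = []
        if self.source_type not in KNOWN_SOURCE_TYPES:
            issues.append(f"unrecognized source type: {self.source_type}")
        if self.rights_status.strip().lower() in {"", "unknown"}:
            issues.append("rights or consent status not documented")
        if not self.lineage:
            issues.append("no lineage steps recorded")
        return issues


card = DataCard(
    dataset="support-tickets-2024",
    source_type="first-party",
    rights_status="unknown",
    opt_out_supported=True,
)
for issue in card.gaps():
    print(issue)
```

A card with no gaps is not proof of compliance; it only shows that the basic questions have a written answer someone can update as the model evolves.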

Impact assessments: start simple, then iterate

Impact assessments are not optional in some places. Colorado is a clear example where certain AI uses can require documented risk management steps. I advise teams to begin with a basic template:

- What decision is being made and who is affected?

- What could go wrong (bias, errors, security, misuse)?

- What controls exist (human review, testing, appeals)?
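The three questions above can be captured in a small structure so an assessment is either complete or visibly not. This is a minimal sketch under my own assumptions: the `ImpactAssessment` fields and the rule that every listed risk needs a named control are illustrative, and no statute prescribes this format.

```python
from dataclasses import dataclass, field


@dataclass
class ImpactAssessment:
    """Basic template: decision, who is affected, what could go wrong, what controls exist."""
    decision: str
    affected_groups: list[str]
    risks: list[str]
    controls: dict[str, str] = field(default_factory=dict)  # risk -> control in place

    def unmitigated_risks(self) -> list[str]:
        """Risks that have no named control yet."""
        return [risk for risk in self.risks if not self.controls.get(risk)]

    def ready_for_review(self) -> bool:
        """True only when all three questions are answered and every risk has a control."""
        answered = bool(self.decision and self.affected_groups and self.risks)
        return answered and not self.unmitigated_risks()


assessment = ImpactAssessment(
    decision="Rank job applicants for recruiter review",
    affected_groups=["job applicants"],
    risks=["bias", "errors"],
    controls={"bias": "quarterly disparate impact test"},
)
print(assessment.unmitigated_risks())
print(assessment.ready_for_review())
```

Starting this small keeps the first version cheap; later iterations can add severity, owners, and review dates without changing the basic shape.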

Wild card: pricing algorithms can be a red flag

One surprise trigger I watch closely is dynamic pricing. Pricing algorithms are explicitly targeted in some jurisdictions, including updates tied to California’s Cartwright Act. If your system adjusts prices per user, location, or demand signals, I treat it as a compliance hotspot.

“If it changes access, opportunity, or price, assume scrutiny—and document your choices.”

Building a Business‑Ready Compliance Program

When I think about preparing for the AI Regulation Wave, I don’t start with a single policy document. I build a program that can survive audits, product changes, and new laws. In practice, I recommend a three‑layer compliance program that connects leadership decisions to day‑to‑day engineering work.

Layer 1: Governance (clear policy and ownership)

Governance is where I set the rules: what AI we allow, what we don’t, and who is accountable. I assign an executive owner, define risk tiers (low/medium/high), and require sign‑off for high‑impact uses like hiring, credit, health, or safety.

- AI policy with approved use cases, prohibited uses, and data handling rules

- Roles: product owner, model owner, data steward, legal reviewer

- Training for teams that build or buy AI tools

Layer 2: Technical controls (audits, logs, and evidence)

Regulators and customers will ask, “Prove it.” This is why I treat logging and testing as compliance tools, not just engineering hygiene. I want to show how a model was trained, what data it used, and how it behaves over time.

- Audit trails for model changes, prompts, and deployments

- Monitoring for drift, bias signals, and safety incidents

- Access controls for sensitive datasets and model endpoints
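One way to make an audit trail harder to quietly edit is to chain entries by hash, so changing an old record breaks every later link. The sketch below is an in-memory illustration only; the `AuditLog` class, its field names, and the choice of hash chaining are my assumptions, and a real system would also need durable storage and access control.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Stable SHA-256 over the JSON form of an entry (without its own hash)."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class AuditLog:
    """Append-only, hash-chained log of model changes, prompts, and deployments."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, details: dict) -> dict:
        """Record an event; details must be JSON-serializable."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = _digest(entry)  # computed before the hash key is added
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each entry points at its predecessor."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("alice", "deploy", {"model": "pricing", "version": "v2"})
log.append("bob", "prompt_update", {"model": "pricing"})
print(log.verify())
```

If someone later edits the first entry's details, `verify()` returns `False`, which is the kind of evidence property that helps when a regulator asks how you know the log is intact.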

“If you can’t produce evidence quickly, you don’t have a compliance program—you have good intentions.”

Layer 3: Operational processes (repeatable workflows)

Operational processes make compliance real. I run AI impact assessments before launch, and I update vendor contracts so suppliers meet the same standards. This is also where I prepare for disclosure duties that may come with frontier or high‑risk systems.

- Pre‑deployment impact assessment (purpose, users, risks, mitigations)

- Vendor review (data rights, security, incident reporting, audit support)

- Incident response playbook (who triages, who reports, timelines)

Maintain a public AI inventory

Some state laws require an AI inventory, and even when they don’t, it’s the fastest way I’ve found to reduce chaos. I catalogue models, data sources, owners, and deployment contexts.

| Item | Example |
| --- | --- |
| Model | Resume screening classifier v3 |
| Data source | Applicant forms + job history |
| Owner | HR tech product lead |
| Context | Hiring recommendations (not final decisions) |
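The same inventory row can live in code so gaps surface automatically. This is a sketch under assumptions: the `risk_tier` and `assessment_done` fields are additions of mine that tie the inventory to the risk tiers and impact assessments discussed earlier, and the two checks in `open_items` are illustrative rather than legally required.

```python
from dataclasses import dataclass


@dataclass
class InventoryEntry:
    """One row of the AI inventory (hypothetical schema)."""
    model: str
    data_source: str
    owner: str
    context: str
    risk_tier: str = "low"         # "low", "medium", or "high"
    assessment_done: bool = False  # has an impact assessment been completed?


def open_items(inventory: list[InventoryEntry]) -> list[tuple[str, str]]:
    """List (model, problem) pairs that need follow-up."""
    items = []
    for entry in inventory:
        if not entry.owner.strip():
            items.append((entry.model, "no owner assigned"))
        if entry.risk_tier == "high" and not entry.assessment_done:
            items.append((entry.model, "high-risk use without impact assessment"))
    return items


inventory = [
    InventoryEntry(
        model="Resume screening classifier v3",
        data_source="Applicant forms + job history",
        owner="HR tech product lead",
        context="Hiring recommendations (not final decisions)",
        risk_tier="high",
    ),
]
for model, problem in open_items(inventory):
    print(f"{model}: {problem}")
```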

HR oversight, whistleblowers, and disclosures

I don’t ignore HR. Whistleblower channels and retaliation protections matter, and California’s AI Safety Act has pushed this topic into the spotlight. Finally, I plan for disclosure requirements early and coordinate PR, legal, and engineering so messaging matches the technical facts—before the first regulator or reporter asks.

Competitive, Antitrust & Labor Risks: Pricing Algorithms and Employment AI

When people talk about the AI Regulation Wave, they often focus on privacy or model safety. In my work, I’ve seen a different pressure point: competition, antitrust, and labor rules. The same AI that helps a business move faster can also look, to regulators, like a tool for unfair pricing or biased hiring.

Pricing algorithms: fast gains, fast scrutiny

I’ve seen pricing algorithms trigger antitrust scrutiny because they can push markets toward “quiet coordination,” even when no one intends to collude. This risk is getting more concrete: California is explicitly curbing common pricing algorithms starting in 2026. If your revenue team relies on dynamic pricing, this is not a “later” problem.

What makes it tricky is that antitrust oversight and consumer protection laws can collide. A pricing model might be legal from one angle (e.g., reacting to demand) but still raise concerns if it looks deceptive, discriminatory, or designed to exploit vulnerable customers. That’s why I tell teams to lawyer up early—not to slow down, but to design guardrails before the model is embedded in core systems.

Employment AI: state rules are tightening

Hiring, promotion, scheduling, and termination tools are also under pressure. Employment decision tools are under new state restrictions (e.g., Illinois) aimed at preventing algorithmic discrimination. Even if your vendor says the tool is “bias-tested,” you may still be responsible for how it’s used, what data feeds it, and whether humans can challenge outcomes.

- Document what the tool does and what it must never do (protected traits, proxies, retaliation risk).

- Test for disparate impact using real workflow data, not just vendor demos.

- Keep humans accountable: define when reviewers must override the model.
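For the disparate impact test, one common screening heuristic is the “four-fifths rule”: compare each group's selection rate to the highest group's rate and flag ratios below 0.8. I am bringing that rule in as an assumption; it is a rough first check, not a legal standard any of the laws above are confirmed to use, and the group labels and threshold below are illustrative.

```python
from collections import Counter
from typing import Iterable


def selection_rates(outcomes: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of candidates selected per group, from (group, was_selected) pairs."""
    totals: Counter = Counter()
    selected: Counter = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(outcomes: Iterable[tuple[str, bool]],
                   threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the threshold (0.8 = four-fifths)."""
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up workflow data: group A has 10 of 20 selected, group B has 3 of 10.
sample = ([("A", True)] * 10 + [("A", False)] * 10
          + [("B", True)] * 3 + [("B", False)] * 7)
print(flagged_groups(sample))  # → ['B']
```

Run it on data pulled from the real workflow, since a tool can pass on a vendor's demo set and still fail on your applicant pool. A flag is a prompt for human and legal review, not a verdict.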

A practical analogy: treat pricing models like “regulated utilities”

One mindset that helps is treating pricing models like regulated utilities: they can operate, but they need clear rules, monitoring, and explainability. I like to ask teams to build “rate-card style” controls:

| Control | Why it matters |
| --- | --- |
| Explainable inputs | Reduces suspicion of hidden coordination or unfair targeting |
| Change logs | Shows who changed pricing logic and when |
| Audit triggers | Flags unusual price jumps or patterns across competitors |
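A simple audit trigger can sit in front of every price change. The sketch below flags large jumps and changes with no recorded reason; the `PriceChange` fields and the 20% default in `max_jump` are assumptions I picked for illustration, not thresholds drawn from any law.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PriceChange:
    """One change-log entry for a pricing decision (hypothetical schema)."""
    sku: str
    old_price: float
    new_price: float
    changed_by: str
    reason: str  # the explainable input behind the change


def needs_review(change: PriceChange, max_jump: float = 0.20) -> bool:
    """Flag a change when the relative jump is large or no reason was recorded."""
    if change.old_price <= 0:
        # No meaningful baseline to compare against, so route to a human.
        return True
    jump = abs(change.new_price - change.old_price) / change.old_price
    return jump > max_jump or not change.reason.strip()


changes = [
    PriceChange("SKU-1", 100.0, 130.0, "pricing-model-v4", "demand spike"),
    PriceChange("SKU-2", 100.0, 105.0, "pricing-model-v4", "cost increase"),
    PriceChange("SKU-3", 100.0, 105.0, "pricing-model-v4", ""),
]
for change in changes:
    print(change.sku, needs_review(change))
```

This covers the first two controls in the table (explainable inputs and change logs). Detecting patterns across competitors needs market data and is deliberately left out of the sketch.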

“If we can’t explain why the model set that price or rejected that applicant, we’re not ready for the AI Regulation Wave.”

Frontier Models, Global Standards, and Scenario Planning

Tabletop exercises for a “frontier model” disclosure request

When I prepare for the AI Regulation Wave, I start with tabletop exercises. I pick a realistic trigger: a regulator asks for a disclosure about a frontier model we use or fine-tune. The first question I ask my team is simple: who owns the logs and who talks to regulators? If we cannot answer that in one minute, we are not ready.

I also test the basics: where prompts and outputs are stored, how long we keep them, and whether we can produce an audit trail without scrambling. In these drills, I assign roles (legal, security, product, vendor manager) and practice a clean handoff so we do not send mixed messages.

“In a disclosure request, speed matters, but accuracy matters more. Logs without context can create risk.”

Global standards are shaping local expectations

Even if my company is U.S.-based, global standards influence what customers and regulators expect. The EU AI Act is a major force, and it is pushing U.S. norms in areas like documentation, risk controls, and transparency. For cross-border companies, the practical move is to harmonize compliance practices so we are not running one program for Europe and another for everyone else.

I keep a simple mapping of requirements across regions so teams can build once and reuse:

| Area | What I standardize |
| --- | --- |
| Documentation | Model cards, data sources, change logs |
| Controls | Access limits, monitoring, incident response |
| Transparency | User notices, escalation paths, audit readiness |

U.S. proposals: budget for safety and legal review

In the U.S., proposals like the RAISE Act and the Frontier AI Act put attention on catastrophic risks and developer obligations. I plan for this by budgeting time and money for legal review, safety testing, and documentation. Even if the final rules change, the direction is clear: more proof, more process, and more accountability.

Wild card: vendor models and inherited liability

One scenario I always include: a supplier provides a model trained on poor data, or with weak controls. Under the AI Regulation Wave, I could inherit liability through my product. That is why vendor clauses matter.

- Data and training disclosures (what was used, what was excluded)

- Audit and incident rights (access to evidence when issues happen)

- Indemnities and limits tied to model misuse or hidden defects

Practical Next Steps: Checklist, Budgets, and Tabletop Scenarios

When I talk to teams about the AI Regulation Wave, the biggest risk I see is waiting for a new rule to land and then scrambling. I treat AI compliance the same way I treat emergency preparedness: you build the kit before the storm, not during it. The goal is not perfection on day one—it’s having a repeatable way to prove you are paying attention, reducing harm, and improving over time.

I end every advisory with the same short checklist because it forces clarity:

- AI inventory: what models you use, where they sit in the product, what data they touch, and who owns them.

- Impact assessments that match the real risk, especially where decisions affect people (hiring, lending, pricing, healthcare, education).

- Vendor clauses, because many businesses rely on third-party AI and still carry the accountability.

- Whistleblower channels that employees trust.

- Disclosure playbooks, so you know what to say if customers, regulators, or the press ask how an algorithm works and how you manage bias.

Budgeting is where good intentions often fail. My practical hint is to start with a pilot compliance budget, then scale once you learn where the gaps are. I usually involve legal, product, and security from the start, because AI regulation touches contracts, user experience, data handling, and incident response all at once. Even a small pilot can fund basic documentation, model monitoring, and a lightweight review process that becomes your foundation as the AI Regulation Wave accelerates.

Finally, I run tabletop exercises quarterly. I simulate a regulator inquiry (“Show us your inventory, assessments, and controls”) or a public complaint about algorithmic bias (“A customer says your system treated them unfairly”). In the exercise, I watch how fast the team can find evidence, who speaks, what gets escalated, and where the story breaks down. Those weak spots are exactly what you fix next.

If you do only one thing after reading this, make it this: treat AI compliance like preparedness. Build the kit now—inventory, assessments, contracts, reporting paths, and disclosures—so when the wave hits, you are steady, credible, and ready to respond.

In short: AI rules are arriving fast, notably laws taking effect in 2026. Identify high‑risk systems, run impact assessments, prepare frontier‑model disclosures, and build a flexible compliance program using the timelines, checklist items, and scenario plans above.